DATA TO THE MASSES

A seminar on data reduction was held at the Audio Engineering Society's convention in New York last October. Ken Pohlmann chaired the seminar, which included comments from various system developers, recorded examples of the various systems, and general comments. I was a panel member and, as such, represented the community of recording engineers. My comments, in question and answer form, are presented in this month's column.

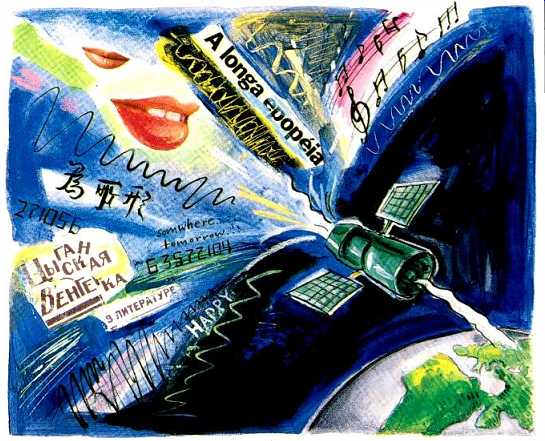

What are the commercial and economic requirements of a given data-reduction system? What is the primary application, when will it be on line, and what degree of data reduction is required? Most of the proposed systems are vying for broadcast acceptance and use in program distribution systems where channel capacity is already overcrowded. There is only room for so many geosynchronous satellites, and in not too many years all available information capacity will be used up.

There is a clear need for data reduction. For speech, the requirements may be minimal, but for music, great care has to be exercised in certifying a system or hierarchy of systems.

The Philips and Sony proposals for DCC and Mini Disc, respectively, are based on data reduction, but they may be considered, in some respects, as closed systems in that the user will normally access them via their analog inputs and outputs. The quality requirements are set by the company to satisfy an established standard for the nonprofessional user. A typical question that may be asked is: Will the DCC meet or exceed the established subjective standards for Dolby B or Dolby S cassettes? Only Philips can answer that question at this point.

Most of the proposed systems target a four-to-one reduction in data. Some systems have been demonstrated with greater data reduction, but they are generally relegated to speech applications.
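To put the four-to-one target in perspective, here is a minimal sketch of the arithmetic. It assumes a CD-format linear PCM source (44.1 kHz sampling, 16 bits, two channels) as the reference; the function names are hypothetical and illustrative only, not part of any of the proposed systems.

```python
def pcm_bit_rate(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Raw (uncompressed) linear PCM bit rate in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


def reduced_bit_rate(raw_bps: float, ratio: float) -> float:
    """Bit rate left after data reduction by `ratio` (e.g. 4.0 for four-to-one)."""
    return raw_bps / ratio


# Assumed reference source: CD-format PCM, 44.1 kHz, 16-bit, stereo.
raw = pcm_bit_rate(44_100, 16, 2)
target = reduced_bit_rate(raw, 4.0)
print(f"raw: {raw / 1000:.1f} kbit/s, after 4:1: {target / 1000:.1f} kbit/s")
# prints "raw: 1411.2 kbit/s, after 4:1: 352.8 kbit/s"
```

In other words, a four-to-one system would have to carry a CD-quality stereo program in roughly a quarter of the channel capacity that the unreduced signal would need.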

How are psychoacoustical guidelines developed? Most seminar participants agreed that psychoacoustics must be "virtually reinvented" if it is to be useful in evaluating proposed data-reduction systems.

The vast bulk of published data on masking, for example, dates from the 1930s and was based largely on sine-wave and noise signals. Today there are wider bandwidth systems, better transducers, and new kinds of music.

Engineers are also more sophisticated and can bring better measurement instruments to bear on the problem. They are also probably better able to identify specific population groups who may be more attuned to specific problems in a given data-reduction algorithm.

Whose ears are to be trusted? Should a system be designed for "non-audibility" for a general population or for a specialized population? There is no doubt that the engineers who work on data-reduction systems will be able to hear relatively small artifacts arising from a given algorithm. After all, they know what to listen for.

The big question is, how will the artifact sound to groups oriented more toward music or music recording? Remember the CBS Copycode affair of a few years ago? Recording engineers as a group were more sensitive to the effect of the proposed notch in the range from 3 to 4 kHz and were very much against it.

Can an algorithm be subjected to impartial scrutiny before it is released? If not, why not? A big problem in the data-reduction game has been the general unavailability of working details of the various algorithms (although this situation has improved somewhat in recent months).

Basically, most of the developers have patent positions they are trying to protect, so it may not be in their best interests to tip their hand. It is almost inevitable that the Achilles' heel of any algorithm will be discovered in time, given enough people listening to and playing with a given system. I think there is agreement that it is better to discover any problems earlier rather than later.

What is the impact of cost on the development of a system? Who determines cost/performance trade-offs? It stands to reason that a more complex data-reduction system will perform better than one of relatively little complexity, in that it will be able to accommodate more types of signals more effectively. But an expensive system may not be acceptable in the mass marketplace. Therefore, somebody must be responsible for determining an operating point along the cost/performance scale. Are these decisions always made in good conscience? And whose conscience is it, anyway?

What are the regulatory aspects of data-reduction systems? For DCC and Mini Disc, we can assume that there are no regulatory aspects. But for broadcast applications, there are numerous regulatory organizations to be satisfied. The FCC in the United States and the European Broadcasting Union (EBU) come to mind.

Then there are the various state-run broadcast groups around the world, many of which have come up with specific broadcast proposals.

What may be the biggest concern is the timetable that these organizations will impose on development, testing, and approval cycles. Will the necessary development work have been done by the time an approved system is to go on line? How much work can be done later to "clean up" a marginal system after it has been accepted? Remember past experiences in the approval of broadcast transmission systems in which the best systems were not always the winners.

What are the effects of cascading various systems? Assume that there is a broadcast standard for data reduction and that a DCC recording is being transmitted. A listener at home wishes to record the program via a DCC recorder. What is the net data reduction when the listener replays the tape? This is a pertinent question, and one not easily answered.

It is quite conceivable that a signal-related problem that was unnoticeable in any one of the segments of the chain could become quite obvious in the complex situation just described. Protocols will need to be developed early on that will identify, and possibly correct, any transmission problems.

What about the possibilities of improving transmission standards with the industry working toward agreement on a digital standard? Even within the development framework of data reduction, there may be some aspects of radio performance whose basic standards can be improved. For example, FM stereo transmission is normally limited to 15 kHz at the high end because of possible problems of interchannel interference.

These problems do not exist as such in digital transmission, and improvements can potentially be made. Certainly there will be improvements in dynamic range and freedom from multi-path distortion, and it would be appropriate to include extended bandwidth as well.
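As a rough worked illustration of what extended bandwidth costs in data, assume uniform linear PCM before any reduction is applied. The sampling rate $f_s$ must exceed twice the highest audio frequency $f_{\max}$, and the raw bit rate $R$ is the product of sampling rate, word length $b$, and channel count $c$. The 32 kHz and 48 kHz rates and the 16-bit stereo format below are example values chosen for illustration, not figures from the seminar.

$$
f_s > 2 f_{\max}, \qquad R = f_s \, b \, c
$$

A 15 kHz ceiling needs $f_s > 30$ kHz (for example, 32 kHz); a 20 kHz ceiling needs $f_s > 40$ kHz (for example, 48 kHz). At 16 bits and two channels:

$$
\frac{R_{48}}{R_{32}} = \frac{48\ \text{kHz} \times 16 \times 2}{32\ \text{kHz} \times 16 \times 2} = \frac{1536\ \text{kbit/s}}{1024\ \text{kbit/s}} = 1.5
$$

So, under these assumptions, extending the bandwidth to 20 kHz means roughly 50 percent more raw data for the reduction system to handle.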

What about future requirements? How crowded are communications channels today, and how crowded will they be in the future? The major concern here is that a data-reduction system that will be adequate for the next 10 years or so will become inadequate by the year 2020. I would hope that by that time the world will be "wired" via fiber optics to such a point that there will no longer be any need to worry!

(adapted from Audio magazine, Mar. 1992)