G2 Music Codec for Streaming

Music codecs designed for Internet streaming must provide fast encoding and decoding, tolerance to lost data, and scalable audio quality. Fast encoding speed is important for live real-time streaming, particularly when encoding at multiple data rates simultaneously. Similarly, decoding must balance subjective audio quality against computing resources.

Simple algorithms may yield poor audio results, while high computational complexity may tax the processor and slow the throughput rate.

The RealAudio G2 music codec is an example of a streaming codec. It is a transform codec that uses a combination of fast algorithms and approximations to achieve fast operation with high audio quality. For example, lookup tables or simpler approximations may be used, and prediction (with subsequent correction) may be used instead of iterative procedures. In this way, simultaneous encodings may be performed with reasonable computing power.

Whereas non-real-time data such as text or static images may be delivered via a network such that missing or corrupted data is retransmitted, with real-time audio (and video) it may not be possible for the server to resend lost data when using "best effort" protocols such as UDP. This is particularly problematic for coders that use predictive algorithms. Because they rely on past data, a lost packet degrades not only the current audio but future audio as well. To help overcome this, interdependent data may be bundled into each packet. However, in that case a packet might comprise 200 ms or more of audio, and a lost packet would result in a long mute. Recovery techniques such as repeating or interpolating may not be sufficient to conceal a gap that long.

The RealAudio G2 music codec limits algorithmic dependencies on prior data. It also encodes data in relatively small, independently decodable units such that a lost frame will not affect surrounding frames. In addition, compressed frames can be interleaved with several seconds of neighboring frames before they are grouped into packets for transmission. In this way, large network packets can be used. This promotes efficiency, yet large gaps in decoded audio are avoided because a lost packet produces many small gaps over several seconds rather than a single large gap. Interpolation then uses past and future data to estimate the missing content and thus cover the small audio gaps. Overall, the G2 music codec can tolerate packet loss of up to 10% to 15% with minimal degradation of audio quality.
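The effect of interleaving can be sketched in a few lines. This is a minimal illustration under simplifying assumptions, not RealNetworks' actual interleaver: each compressed frame is reduced to a single number, frames are dealt round-robin into packets, and the helper names (`interleave`, `deinterleave`, `conceal`) are hypothetical. Losing one packet then leaves isolated one-frame gaps, which are filled by averaging the nearest surviving frames on either side.

```python
def interleave(frames, n_packets):
    """Deal frames round-robin into packets: frame i goes to packet i % n_packets."""
    packets = [[] for _ in range(n_packets)]
    for i, frame in enumerate(frames):
        packets[i % n_packets].append(frame)
    return packets


def deinterleave(packets, n_frames, lost=frozenset()):
    """Restore frame order; frames carried by lost packets come back as None."""
    n_packets = len(packets)
    frames = [None] * n_frames
    for p, packet in enumerate(packets):
        if p in lost:
            continue
        for j, frame in enumerate(packet):
            frames[p + j * n_packets] = frame
    return frames


def conceal(frames):
    """Fill each missing frame from its nearest surviving neighbors (past and future)."""
    out = list(frames)
    for i, f in enumerate(out):
        if f is not None:
            continue
        prev = next((out[k] for k in range(i - 1, -1, -1) if out[k] is not None), None)
        nxt = next((frames[k] for k in range(i + 1, len(frames)) if frames[k] is not None), None)
        if prev is not None and nxt is not None:
            out[i] = (prev + nxt) / 2  # interpolate across the one-frame gap
        else:
            out[i] = prev if prev is not None else (nxt if nxt is not None else 0.0)
    return out


frames = list(range(12))                 # 12 frames, one value each for illustration
packets = interleave(frames, 4)          # 4 packets of 3 interleaved frames
received = deinterleave(packets, len(frames), lost={1})
restored = conceal(received)
```

A real decoder interpolates audio content rather than scalar values, but the gap pattern is the point: losing one packet out of four costs every fourth frame rather than three consecutive frames.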

Ideally, codecs provide both a wide frequency response and high fidelity of the coded waveform. But in many cases, although a wide frequency response is obtained, the waveform is coded relatively poorly. Alternatively, codecs may support only a narrow frequency response while trying to optimize accuracy in that range; speech codecs are examples of the latter approach. To balance these demands on the bit rate, many codecs aim at a specific bit-rate range and deliver optimal performance at that rate.

However, when used at a lower rate, the audio quality may be less than that of another codec targeted for that low rate.

Conversely, a low bit-rate codec may not be optimal at higher bit rates or its computational complexity may make it inefficient at high rates.

The RealAudio G2 music codec was optimized for Internet bit rates of 16 kbps to 32 kbps to provide good fidelity over a relatively broad frequency response. The algorithm was also optimized for bit rates outside the original design range, accommodating rates from 6 kbps to 96 kbps and average frequency responses ranging from 3 kHz to 22 kHz. These frequency responses are averages because the codec can dynamically change its response according to current data requirements. For example, if a musical selection is particularly difficult to code, the codec dynamically decreases the frequency response so that additional data is available to more accurately reproduce the remaining frequency range. With this scalability, the codec can deliver the maximum quality possible for a given connection. RealNetworks G2 players use G2 music encoding. A version of Dolby's AC-3 format is employed if files are encoded for backward compatibility with older RealPlayers. For speech coding, a CELP-based (Code Excited Linear Prediction) coder is employed.

The SureStream protocol is used by RealNetworks to deliver streaming RealAudio and RealVideo files over the Internet. It specifically addresses the issues of constrained and changing bandwidth on the Internet, as well as differences in connection rate. SureStream creates one file for all connection rate environments, streams to different connection rates in a heterogeneous environment, seamlessly switches to different streams based on changing network conditions, prioritizes key frames and audio data over partial frame data, thins video key frames for the lowest possible data rate throughput, and streams backward-compatible files to older RealPlayers.

Internet connectivity can range from cell phones to high speed corporate networks, with a corresponding range of data transfer rates. A high-bandwidth stream would satisfy users with high-bandwidth connections, but would incur stream stoppages and continual re-buffering for users with low-speed connectivity. To remedy this, scalable streams can use stream-thinning in which the server reduces the bit rate to alleviate client re-buffering. However, when a stream has been optimized for one rate, and then it is thinned to a lower rate, content quality may suffer disproportionately.

Moreover, this approach is more appropriate for streaming video than for audio.

To serve different connection rates, multiple audio files can be created, and the server transmits the one with the most applicable bit rate. If this bandwidth negotiation is not dynamic, the server cannot adjust to changes in a user's throughput (due to congestion or packet loss). With SureStream, multiple streams at different bit rates may be simultaneously encoded and combined into a single file. In addition, the server can detect changes in bandwidth and change the combination of streams it delivers. This Adaptive Stream Management (ASM) in the RealSystem G2 protocol can thus deliver data packets efficiently. File format and broadcast plug-ins define the ASM rules that assign predefined properties such as "priority" and "average bandwidth" to groups of packets. If packets must be sent again, for example, the packet priorities identify which packets are most important to redeliver. With ASM, plug-ins use condition expressions to monitor and modify the delivery of packets based on changing network conditions. For example, an expression might define client bandwidth from 5 kbps to 15 kbps and packet loss of less than 2.5%. The client subscribes to the rule if that condition describes the client's current network connection. If network conditions change, the client can subscribe to a different rule.
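Rule subscription of this kind can be sketched as an ordered list of conditions checked against the client's measured bandwidth and loss. This is a hypothetical model for illustration only: it does not reproduce RealSystem's actual ASM rule syntax, and the stream names and thresholds other than the 5 kbps to 15 kbps, under-2.5%-loss example from the text are assumptions.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class Rule:
    """One hypothetical ASM-style rule: a condition plus the stream it selects."""
    stream: str
    min_kbps: float
    max_kbps: float
    max_loss_pct: float  # condition holds only if packet loss is below this value


# Ordered from most to least demanding; the last rule is a catch-all fallback.
RULES = [
    Rule("high", 15.0, math.inf, 2.5),
    Rule("mid", 5.0, 15.0, 2.5),          # the 5-15 kbps, <2.5% loss example
    Rule("low", 0.0, math.inf, math.inf),
]


def subscribe(bandwidth_kbps, loss_pct, rules=RULES):
    """Return the stream of the first rule whose condition matches current conditions."""
    for rule in rules:
        if rule.min_kbps <= bandwidth_kbps < rule.max_kbps and loss_pct < rule.max_loss_pct:
            return rule.stream
    raise LookupError("no rule matches the current network conditions")
```

Calling `subscribe` again whenever new measurements arrive mirrors the client moving to a different rule as network conditions change.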

The encoder can create multiple embedded copies at varying discrete bit rates. The server seamlessly switches streams based on each client's current bandwidth or packet-loss conditions. The system must therefore monitor bandwidth and losses quickly. If a bandwidth constriction is not detected quickly, for example, the router connecting to the user will drop packets, causing signal degradation. However, if the system reacts too quickly to short-term bandwidth fluctuations, it may shift the stream's bit rate unnecessarily.
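One common way to handle this tradeoff is to smooth the bandwidth measurements and to switch asymmetrically: drop to a lower stream at once, but move up only after several consecutive favorable estimates. The sketch below is a hypothetical policy, not the SureStream algorithm; the class name, the smoothing factor `alpha`, the `up_hold` count, and the stream rates are all assumptions.

```python
class StreamSelector:
    """Hypothetical selector among embedded streams: fast downshift, slow upshift."""

    def __init__(self, rates_kbps, alpha=0.3, up_hold=3):
        self.rates = sorted(rates_kbps)
        self.alpha = alpha          # weight of the newest measurement in the moving average
        self.up_hold = up_hold      # consecutive favorable estimates needed to step up
        self.estimate = None
        self.current = self.rates[0]
        self._up_count = 0

    def _best_fit(self):
        fits = [r for r in self.rates if r <= self.estimate]
        return fits[-1] if fits else self.rates[0]

    def update(self, measured_kbps):
        # Exponentially weighted moving average smooths momentary jitter.
        if self.estimate is None:
            self.estimate = measured_kbps
        else:
            self.estimate = self.alpha * measured_kbps + (1 - self.alpha) * self.estimate
        target = self._best_fit()
        if target < self.current:
            # Step down immediately so the bottleneck router does not start dropping packets.
            self.current = target
            self._up_count = 0
        elif target > self.current:
            # Step up only after the higher rate has looked safe several times in a row.
            self._up_count += 1
            if self._up_count >= self.up_hold:
                self.current = target
                self._up_count = 0
        else:
            self._up_count = 0
        return self.current


sel = StreamSelector([20, 32, 45, 80])
trace = [sel.update(m) for m in (100, 100, 100, 10)]
```

In this trace the selector waits three measurements before moving from 20 kbps to 80 kbps, then falls back to 45 kbps on the first low reading, because the smoothed estimate drops to about 73 kbps.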

Audio Webcasting

The Internet is efficient at sending data such as email from one point to another; this is called unicasting. Likewise, direct music transactions can be accommodated. For some applications, point-to-point communication is not efficient. For example, it would be inefficient to use such methods to send the same message to millions of users because a separate transmission would be required for each one. IP multicasting is designed to overcome this.

Using terrestrial broadcast, satellite downlink, or cable, multicasting can send one copy to many simultaneous users. Much like radio, information can be continually streamed using special routers. Instead of relying on servers to send multiple transmissions, individual routers are assigned the responsibility of replicating and delivering data streams. In addition, multicasting provides timing information to synchronize audio/video packets. This is advantageous even if only a relatively few key routers are given multicasting capability. The Real-time Transport Protocol (RTP) delivers real-time synchronized data. The Real-time Transport Control Protocol (RTCP) works with RTP to provide quality-of-service information about the transmission path. The Real Time Streaming Protocol (RTSP) is specifically designed for streaming applications. It minimizes the overhead of multimedia delivery and uses high-efficiency methods such as IP multicast. The Resource Reservation Protocol (RSVP) works with RTP or RTSP to reserve network resources to ensure a specific end-to-end quality of service. In this way, music distribution systems can efficiently distribute music to consumers.
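The saving from router replication can be counted on a small distribution tree. The topology below is a hypothetical example with five listeners; the functions count how many copies of one stream cross network links under unicast (one copy per listener on every link of its path) and under multicast (routers replicate, so each link carries one copy).

```python
# Hypothetical distribution tree: each node maps to its children; leaves are listeners.
TREE = {
    "server": ["r1"],
    "r1": ["r2", "r3"],
    "r2": ["a", "b", "c"],
    "r3": ["d", "e"],
}


def leaves(node):
    """Listeners reachable below a node."""
    kids = TREE.get(node, [])
    if not kids:
        return [node]
    return [leaf for k in kids for leaf in leaves(k)]


def unicast_link_copies(node="server"):
    # Every listener below a link receives its own copy over that link.
    return sum(len(leaves(k)) + unicast_link_copies(k) for k in TREE.get(node, []))


def multicast_link_copies(node="server"):
    # Routers replicate the stream, so each link carries exactly one copy.
    return sum(1 + multicast_link_copies(k) for k in TREE.get(node, []))
```

For this tree, unicast puts 15 copies on the links (5 of them on the server's own uplink), while multicast puts one copy on each of the 8 links; the gap widens as the audience grows.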

The RealSystem G2 supports two types of IP multicast: back-channel and scalable. Back-channel multicast uses RealNetworks' Real Delivery Transport (RDT) data packet transport protocol. Data is multicast over UDP, which does not guarantee delivery, but RDT includes a back-channel or resend channel. An RTSP or RealNetworks PNA control channel is thus maintained between the server and the client; this communicates client information to the server. A packet resend option can be employed when multicast UDP packets are lost. In addition, back-channel content can be authenticated, and client connections are logged in the server. G2 back-channel multicast serves all bit rates defined in the SureStream protocol. However, the bandwidth is static and will not dynamically vary according to network conditions. Back-channel multicasting is preferred when content quality is important and audience size is limited. It is not suitable for large audiences because of the system resources required for each client connection.

Scalable multicast uses a data channel only (no TCP control channel between server and clients) that is multicast with RTP/RTCP as the data packet transport and session reporting protocols. Two ports are used: one carries the data (RTP) while the other reports on the quality of data delivery (RTCP). Data is delivered over UDP. Client connection statistics are not available during G2 scalable multicasting; however, the server logs all users. Scalable multicasting supports SureStream by multicasting each stream on a unique multicast address/port. The client chooses a preferred stream type, and the bit rate does not vary dynamically as it does with unicasting. Minimal server resources are required for any size audience; thus, it is preferred for large-audience live events. However, there is no recovery mechanism for packet loss. Because G2 scalable multicasting is implemented with standard protocols such as RTP, RTCP, SDP, and SAP, it can interoperate with other applications that use these protocols.

MPEG-4 Audio Standard

The MPEG-4 standard, as with MPEG-1 and MPEG-2, defines ways to represent audio-visual content. It is also designed for multimedia communication and entertainment applications. Moreover, MPEG-4 retains backward compatibility with its predecessors. However, MPEG-4 is a departure from the MPEG-1 and MPEG-2 standards because it offers considerably greater diversity of application and considerable interoperability with other technologies including other content delivery formats.

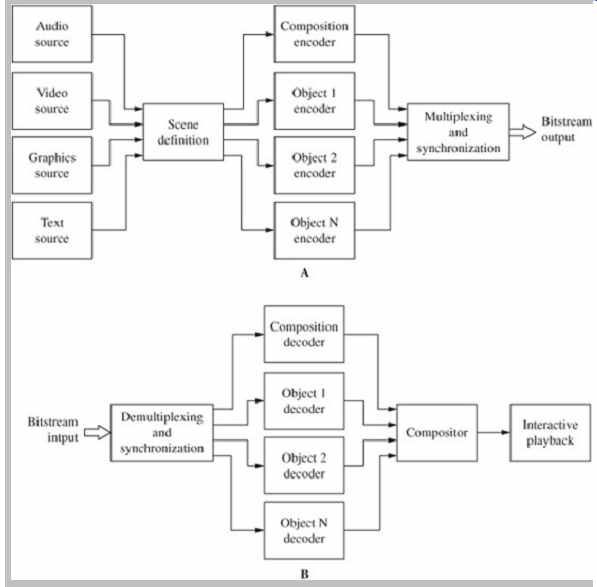

MPEG-4 is a family of tools (coding modules) available for a wide variety of applications including interactive coding of audiovisual objects whose properties are defined with a scene description language. Objects can comprise music, speech, movies, text, graphics, animated faces, a Web page, or a virtual world. A scene description supplies the temporal and spatial relationships between the objects in a scene. MPEG-4 emphasizes very low bit rates and a scalable coded bitstream, allowing operation over the Internet and other networks. MPEG-4 supports high-quality audio and video, wired, wireless, streaming, and digital broadcasting applications. MPEG-4 promotes content-based multimedia interactivity. It specifies how to represent both natural and synthetic (computer-generated) audio and video material as objects, and defines how those objects are transmitted or stored and then composed to form complete scenes. An example of MPEG-4 architecture is shown in FIG. 6. For example, a scene might consist of a still image comprising a fixed background, a video movie of a person, the person's voice, a graphics insert, and running text. With MPEG-4, those five independent objects can be transmitted as multiplexed data streams along with a scene description.

Fig. 6 The MPEG-4 standard provides an object-based representation architecture. A. Encoder. B. Decoder.

Data streams are demultiplexed at the receiver, the objects are decompressed, composed according to the scene description, and presented to the user. Moreover, because the objects are individually represented, the user can manipulate each object separately. The scene descriptions that accompany the objects describe their spatial-temporal synchronization and behavior (how the objects are composed in the scene) during the presentation. MPEG-4 also supports management of intellectual property as well as controlled access to it. As with many other standards, MPEG-4 provides normative specifications to allow interoperability, as well as many non-normative (informative) details. Version 1 of the MPEG-4 standard (ISO/IEC 14496), titled "Coding of Audio-Visual Objects," was adopted in October 1998.

MPEG-4 Interactivity

MPEG-4 uses a language called BInary Format for Scenes (BIFS) to describe and dynamically change scenes. BIFS uses aspects of the Virtual Reality Modeling Language (VRML'97), but whereas VRML describes objects in text, BIFS describes them in binary code. Unlike VRML, BIFS allows real-time streaming of scenes. BIFS allows a great degree of interactivity between objects in a scene and the user. Objects may be placed anywhere in a given coordinate system, transforms may be applied to change the geometrical or acoustical aspects of an object, primitive objects can be grouped to form compound objects, streamed data can be applied to objects to modify their attributes, and the user's viewing and listening points can be placed anywhere in the scene. Up to 1024 objects may comprise a scene.

The user may interact with the presentation, either by using local processing or by sending information back to the sender. Alternatively, data may be used locally, for example, stored on a hard disk. MPEG-4 employs an object-oriented approach to code multimedia information for both mobile and stationary users. The standard uses a syntax language called MSDL (MPEG syntax description language) to achieve flexibility and extensibility.

Whereas MPEG-1 and MPEG-2 describe ways to compress, transmit, and store frame-based video and audio content with minimal interactivity, MPEG-4 provides for control over individual data objects and the way they relate to each other, and also integrates many diverse kinds of data. However, MPEG-4 does not specify a transport mechanism. This allows diverse methods (such as the MPEG-2 transport stream, asynchronous transfer mode, and the Real-time Transport Protocol on the Internet) to be used to access data over networks and other means. Some examples of applications for MPEG-4 are Internet multimedia streaming (the MPEG-4 player operating as a plug-in for a Web browser), interactive video games, interpersonal communications such as videoconferencing and videophone, interactive storage media such as optical discs, multimedia mailing, networked database services, co-broadcast on HDTV, remote emergency systems, remote video surveillance, wireless multimedia, and broadcasting applications.

Users see scenes as they are authored; however, the author can allow interaction with the scene. For example, a user could navigate through a scene and change the viewing and listening point; a user could drag objects in the scene to different positions; a user could trigger a cascade of events by clicking on a specific object; and a user could hear a virtual phone ring and answer the phone to establish two-way communication. MPEG-4 uses the Delivery Multimedia Integration Framework (DMIF) protocol to manage multimedia streaming. It is similar to FTP; however, whereas FTP returns data, DMIF returns pointers to streamed data.

There are many creative applications of MPEG-4 audio coding. For example, a string quartet might be transmitted as four audio objects, one per instrument. The listener could listen to any or all of the instruments, perhaps deleting the cello, so he or she could play along on his or her own instrument. In another example, the audio portion of a movie scene might contain four types of objects: dialogue, a passing airplane sound effect, a public-address announcement, and background music. The dialogue could be encoded in multiple languages, using different objects; the sound of the passing airplane could be processed with pitch-shifting and panning, and could be omitted in a low bit-rate stream; the public-address announcement could be presented in different languages and also reverberated at the decoder; and the background music could be coded with MPEG-2 AAC.
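The string-quartet example can be sketched as a tiny compositor: each instrument is a separate audio object with a gain set by the scene description, and the receiver mixes the objects after the user overrides one gain. This is a hypothetical model for illustration; MPEG-4 composition is actually described with BIFS scene nodes, and the class and function names here are assumptions.

```python
from dataclasses import dataclass


@dataclass
class AudioObject:
    """Hypothetical decoded audio object with a per-object gain from the scene."""
    name: str
    samples: list
    gain: float = 1.0  # set by the scene description; the user may override it


def compose(objects):
    """Mix all audio objects sample by sample according to their gains."""
    length = max(len(o.samples) for o in objects)
    mix = [0.0] * length
    for obj in objects:
        for i, s in enumerate(obj.samples):
            mix[i] += obj.gain * s
    return mix


quartet = [
    AudioObject("violin 1", [0.1, 0.2, 0.3]),
    AudioObject("violin 2", [0.0, 0.1, 0.0]),
    AudioObject("viola", [0.2, 0.0, 0.1]),
    AudioObject("cello", [0.5, 0.5, 0.5]),
]

# The listener mutes the cello in order to play the part along with the recording.
for obj in quartet:
    if obj.name == "cello":
        obj.gain = 0.0
mix = compose(quartet)
```

Because the objects stay separate until the final mix, removing or rebalancing one of them costs nothing at the encoder; the change happens entirely at the receiver.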

MPEG-4 Audio Coding

MPEG-4 audio consolidates high-quality music coding, speech coding, synthesized speech, and computer music in a common framework. It spans the range from low-complexity mobile-access applications to high-quality sound systems, building on the existing MPEG audio codecs. It supports high-quality monaural, stereo, and multichannel signals using both MPEG-2 AAC and MPEG-4 coding. In particular, MPEG-4 supports very low bit rates; it codes natural audio at bit rates from 2 kbps to 64 kbps/channel. When variable-rate coding is employed, coding at less than 2 kbps (perhaps with an average bit rate of 1.2 kbps) is also supported. MPEG coding tools such as AAC are employed for bit rates above 16 kbps/channel, and the MPEG-4 standard defines the bitstream syntax and the decoding processes as a set of tools. MPEG-4 also supports intermediate-quality audio coding, such as the MPEG-2 LSF (Low Sampling Frequencies) mode. The audio signals in this region typically have sampling frequencies starting at 8 kHz.

Generally, the MPEG-4 audio tools can be grouped according to functionality: speech, general audio, scalability, synthesis, composition, streaming, and error protection. Eight profiles are supported: Main, Scalable, Speech, Synthesis, Natural, High Quality, Low Delay, and Mobile Audio Internetworking (MAUI).

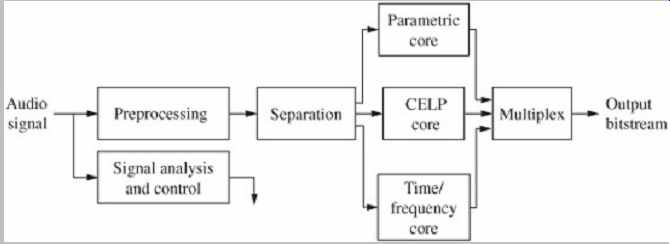

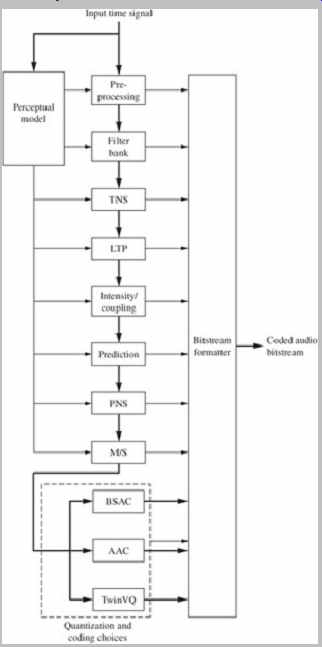

MPEG-4 supports wideband speech coding, narrow-band speech coding, intelligible speech coding, synthetic speech, and synthetic audio. Version 1 defines four audio profiles, each comprising a set of tools. The Synthesis Profile provides score-driven synthesis using SAOL (described below), wavetable synthesis, and a Text-to-Speech (TTS) interface to generate speech at very low bit rates. The Speech Profile provides a Harmonic Vector eXcitation Coding (HVXC) very-low bit-rate parametric speech coder, and a CELP narrow-band/wideband speech coder. The Scalable Profile, a superset of the speech and synthesis profiles, provides scalable coding of speech and music for networks such as the Internet and Narrow-band Audio DIgital Broadcasting (NADIB). The bit rates range from 6 kbps to 24 kbps, with bandwidths between 3.5 kHz and 9 kHz. The Main Profile is a superset of the other three profiles, providing tools for natural and synthesized audio coding. The general structure of the MPEG-4 audio encoder is shown in FIG. 7. Three general types of coding can be used. Parametric models are useful for low bit-rate speech signals and low bit-rate music signals with single instruments that are rich in harmonics. CELP coding uses a source vocal tract model for speech coding, as well as a quantization noise spectral envelope that tracks the spectral envelope of the audio signal. Time/frequency coding, such as that used in MPEG-1 and MPEG-2 AAC, can also be used.

Fig. 7 The MPEG-4 audio encoder can use three general classes of coding algorithms.

Speech coding at bit rates between 2 kbps and 24 kbps is supported by parametric Harmonic Vector eXcitation Coding (HVXC) for a recommended operating bit rate of 2 kbps to 4 kbps and CELP coding for an operating bit rate of 4 kbps to 24 kbps. In variable bit-rate mode, an average of about 1.2 kbps is possible. The HVXC codec allows user variation in speed and pitch; the CELP codec supports variable speed using an effects tool. In HVXC coding, voiced segments are represented by harmonic spectral magnitudes of linear-predictive coding (LPC) residual signals, and unvoiced segments are represented by a vector excitation coding (VXC) algorithm. In CELP coding, two sampling rates, 8 kHz and 16 kHz, are used to support narrow-band and wideband speech, respectively.

The CELP coder uses two excitation modes: multipulse excitation (MPE) and regular pulse excitation (RPE). Both a narrow-band mode (8-kHz sampling frequency) and a wideband mode (16-kHz sampling frequency) are supported. The synthetic coding is known as synthetic/natural hybrid coding (SNHC). Some of the MPEG-4 audio coding tools are shown in Table 5.

Table 5: A partial list of MPEG-4 audio coding tools.

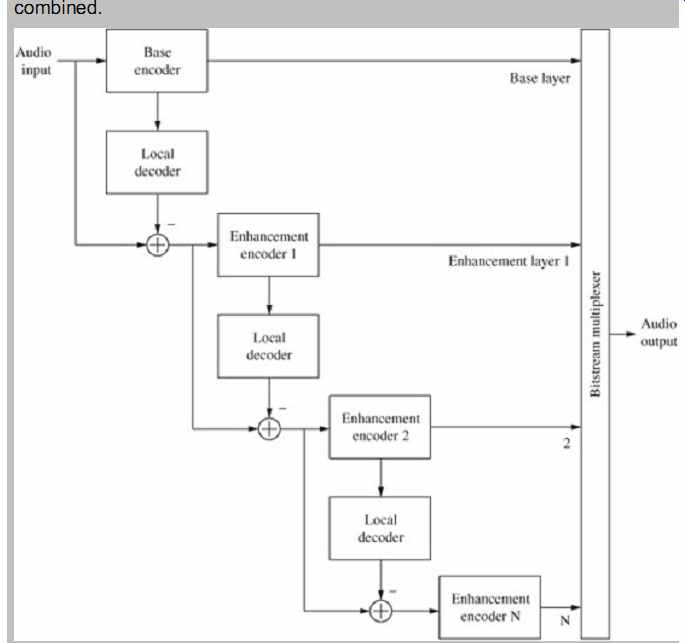

MPEG-4 provides scalable audio coding without degrading coding efficiency. With scalability, the transmission and decoding of the bitstream can be dynamically adapted to diverse conditions; this is known as large-step scalability. Speech and music can be coded with a scalable method in which only a part of the bitstream is sufficient for decoding at a lower bit rate and a correspondingly lower quality level. This permits, for example, adaptation to changing transmission channel capacity and playback with decoders of different complexity. Bitstream scalability also permits operations with selected content. For example, part of a bitstream representing a certain frequency spectrum can be discarded. In addition, one or several audio channels can be singled out by the user for reproduction in combination with other channels. Scalability in MPEG-4 is primarily accomplished with hierarchical embedded coding such that the composite bitstream comprises multiple subset bitstreams that can be decoded independently or combined.

Fig. 8 --- The MPEG-4 standard permits scalability of the audio bitstream. A base layer is successively recoded to generate multiple enhancement layers.

As shown in FIG. 8, the composite bitstream contains a base-layer coding of the audio signal and one or more enhancement layers. Each enhancement-layer bitstream is generated by subtracting the locally decoded output of the previous coding stage from that stage's input signal to produce a residual, or coding error, signal. This residual signal is coded by an enhancement encoder to produce the next partial bitstream. This is repeated to produce all of the enhancement layers. A decoder can use the base layer for basic audio quality or add subsequent enhancement layers to improve audio quality.
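The layering principle can be sketched with uniform quantizers standing in for the real layer codecs (in MPEG-4 these would be, for example, a CELP or TwinVQ base layer and AAC enhancement layers). The function names and step sizes are assumptions for illustration. Each layer quantizes whatever error the previous layers left, so a decoder that receives more layers reconstructs the signal more accurately.

```python
def quantize(values, step):
    """Uniform quantizer standing in for one layer's encoder plus local decoder."""
    return [step * round(v / step) for v in values]


def encode_layers(signal, steps):
    """Base layer first; each enhancement layer codes the residual left by the previous one."""
    layers, residual = [], list(signal)
    for step in steps:
        coded = quantize(residual, step)                      # locally decoded layer
        layers.append(coded)
        residual = [r - c for r, c in zip(residual, coded)]   # coding error for next layer
    return layers


def decode(layers, n_layers):
    """Sum the base layer and however many enhancement layers were received."""
    out = [0.0] * len(layers[0])
    for layer in layers[:n_layers]:
        out = [o + v for o, v in zip(out, layer)]
    return out


signal = [0.37, -1.52, 2.9, 0.05]
layers = encode_layers(signal, steps=[1.0, 0.25, 0.0625])
errors = [max(abs(s - d) for s, d in zip(signal, decode(layers, n))) for n in (1, 2, 3)]
```

The worst-case error after each layer is bounded by half that layer's step size, so here it shrinks from 0.48 (base layer only) to 0.12 and then to 0.025 as enhancement layers are added.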

Scalability can be used for signal-to-noise or bandwidth improvements, as well as monaural to stereo capability.

Although MPEG-4 imposes some restrictions on scalability, many coding configurations are possible. For example, either the Transform-domain Weighted INterleaved Vector Quantization (TwinVQ) or AAC codec could be used for both base and enhancement coding, or a TwinVQ or CELP codec could be used for the base layer and AAC used for enhancement layers. To permit scalability, the bitstream syntax of MPEG-4 differs somewhat from that of MPEG-2. The coding allows pitch changes and time changes, and is designed for error resilience.

MPEG-4 defines structured audio formats and an algorithmic sound language in which sound models are used to code signals at very low bit rates. Sound scene specifications are created and transmitted over a channel and executed at the receiver; synthetic music and sound effects can be conveyed at bit rates of 0.01 kbps to 10 kbps. The description of postproduction processing such as mixing multiple streams and adding effects to audio scenes can be conveyed as well.

As with other content, audio in MPEG-4 is defined on an object-oriented basis. An audio object can be defined as any audible semantic entity, for example, voices of one or more people, or one or more musical instruments, and so on. It might be a monaural or multichannel recording, and audio objects can be grouped or mixed together. An audio object can be more than one audio signal; for example, one multichannel MPEG-2 audio bitstream can be coded as one object.

MPEG-4 also defines audio composition functions. It can describe aspects of the audio objects such as synchronization and routing, mixing, tone control, sample rate conversion, reverberation, spatialization, flanging, filtering, compression, limiting, dynamic range control, and other characteristics.

MPEG-4's Structured Audio tools are used to decode input data and produce output sounds. Decoding uses a synthesis language called Structured Audio Orchestra Language (SAOL, pronounced "sail"). It is a signal processing language used for music synthesis and effects post-production using MPEG-4. SAOL is similar to the Music V family of music-synthesis languages; it is based on the interaction of oscillator models. SAOL defines an "orchestra" comprising "instruments" that are downloaded in the bitstream, not fixed in the decoder. An instrument is a hardware or software network of signal-processing primitives that might emulate specific sounds such as those of a natural acoustic instrument. "Scores" or "scripts" in the downloaded bitstream are used to control the synthesis. A score is a time-sequenced set of commands that invokes various instruments at specific times to yield a music performance or sound effects.
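The orchestra/score division can be illustrated with a minimal Python analogue. It is not SAOL or SASL syntax; the instrument, the tuple score format, and the 8-kHz rate are assumptions chosen for brevity. The "orchestra" maps instrument names to synthesis functions, and the "score" lists timed note events that the renderer mixes into one output buffer. Only the short instrument definitions and score would need to be transmitted, which is why structured audio can operate at such low bit rates.

```python
import math

FS = 8000  # assumed output sample rate for this sketch


def sine_instrument(freq, dur, amp=0.5):
    """A one-oscillator 'instrument': a plain sine tone."""
    n = int(dur * FS)
    return [amp * math.sin(2 * math.pi * freq * i / FS) for i in range(n)]


ORCHESTRA = {"sine": sine_instrument}

# Score lines: (start time in s, instrument name, duration in s, frequency in Hz)
SCORE = [(0.0, "sine", 0.5, 440.0), (0.25, "sine", 0.5, 660.0)]


def render(score, orchestra):
    """Invoke each instrument at its start time and mix the notes together."""
    end = max(start + dur for start, _, dur, _ in score)
    out = [0.0] * int(round(end * FS))
    for start, name, dur, freq in score:
        note = orchestra[name](freq, dur)
        offset = int(round(start * FS))
        for i, s in enumerate(note):
            out[offset + i] += s
    return out


out = render(SCORE, ORCHESTRA)
```

The two notes overlap between 0.25 s and 0.5 s, and the renderer simply sums them, in the spirit of a score invoking several instruments at specific times.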

The Structured Audio Sample Bank Format (SASBF) describes a bank of wavetables storing specific instruments that can be reproduced with a very low data rate. The score description is downloaded in a language called Structured Audio Score Language (SASL). It can create new sounds and include additional control information to modify existing sounds. Alternatively, MIDI may be used to control the orchestra. A "wavetable bank format" is also standardized so that sound samples for use in wavetable synthesis may be downloaded, as well as simple processing such as filters, reverberation, and chorus effects.

MPEG-4 defines a signal-processing language for describing synthesis methods but does not standardize a synthesis method. Thus, any synthesis method may be contained in the bitstream. However, because the language is standardized, all MPEG-4 compliant synthesized music sounds the same on every MPEG-4 decoder. MPEG-4 also defines a text-to-speech (TTS) conversion capability.

Text is converted into a string of phonetic symbols and the corresponding synthetic units are retrieved from a database and then concatenated to synthesize output speech. The system can also synthesize speech with the original prosody (such as pitch contour and phoneme duration) from the original speech, synthesize speech with facial animation, synchronize speech to moving pictures using text and lip shape information, and alter the speed, tone, and volume, as well as the speaker's sex and age. In addition, TTS supports different languages. TTS data rates can range from 200 bps to 1.2 kbps. Some possible applications for TTS include artificial storytellers, speech synthesizers for avatars in virtual-reality applications, speaking newspapers, and voice-based Internet.

Scalability options mean, for example, that a single audio bitstream could be decoded at a variety of bandwidths depending on the speed of the listener's Internet connection. An audio bitstream can comprise multiple streams of different bit rates. The signal is encoded at the lowest bit rate, and then the difference between the coded and original signal is coded.

MPEG-4 Versions

Version 1 of MPEG-4 was adopted in October 1998.

Version 2 was finalized in December 1999 and adds tools to the MPEG-4 standard, but the Version 1 standard remains unchanged. In other words, Version 2 is a backward-compatible extension of Version 1. In December 1999, Versions 1 and 2 were merged to form a second edition of the MPEG-4 standard. A block diagram of the MPEG-4 general audio encoder is shown in FIG. 9.

Long-term prediction (LTP) and perceptual noise substitution (PNS) tools are included along with a choice of AAC, BSAC, or TwinVQ tools; these differentiate this general audio encoder from the AAC encoder.

Version 2 extends the audiovisual capabilities of the standard. For example, Version 2 provides audio environmental spatialization. The acoustical properties of a scene such as a 3D model of a concert hall can be characterized with the BIFS scene description tools.

Properties include room reverberation time, speed of sound, boundary material properties such as reflection and transmission, and sound source directivity. These scene description parameters allow advanced audiovisual rendering, detailed room acoustical modeling, and enhanced 3D sound presentation.

Version 2 also improves the error robustness of the audio algorithms; this is useful, for example, in wireless channels. Some aspects address the error resilience of particular codecs, and others provide general error protection. Tools reduce the audibility of artifacts and distortion caused by bit errors. Huffman coding for AAC is made more robust with a Huffman codeword reordering tool. It places priority codewords at regular positions in the bitstream; this allows resynchronization that limits bit-error propagation. Scale-factor bands whose spectral coefficients have large absolute values are vulnerable to bit errors, so virtual codebooks are used to limit the maximum coefficient value allowed within each scale-factor band. Thus large coefficients produced by bit errors can be detected and their effects concealed. A reversible variable-length coding tool improves the error robustness of the Huffman-coded DPCM scale factors by using symmetrical codewords, so scale-factor data can be decoded forward or backward. Unequal error protection can also be applied by reordering the bitstream payload according to error sensitivity to allow adapted channel coding techniques. These tools allow flexibility in different error-correction overheads and capabilities, thus accommodating diverse channel conditions.
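The forward/backward decoding property can be sketched with a toy code table. The table below is hypothetical, not the actual MPEG-4 RVLC scale-factor table: every codeword is a palindrome and no codeword is a prefix of another, which means none is a suffix of another either, so the same table parses the bitstream from the front or from the back.

```python
# Hypothetical reversible VLC: palindromic, prefix-free (hence also suffix-free) codewords.
RVLC_TABLE = {0: "0", 1: "11", 2: "101", 3: "1001", 4: "10001"}
DECODE = {code: sym for sym, code in RVLC_TABLE.items()}


def encode(symbols):
    return "".join(RVLC_TABLE[s] for s in symbols)


def _parse(bits):
    """Greedy prefix parse; returns decoded symbols and any unparsed leftover bits."""
    out, current = [], ""
    for b in bits:
        current += b
        if current in DECODE:
            out.append(DECODE[current])
            current = ""
    return out, current


def decode_forward(bits):
    return _parse(bits)[0]


def decode_backward(bits):
    # Palindromic codewords read the same reversed, so the same table applies;
    # reversing the decoded list restores the original symbol order.
    symbols, _ = _parse(bits[::-1])
    return symbols[::-1]


bits = encode([2, 0, 4, 1, 3])
```

In a decoder, a forward pass that breaks down at a corrupted bit can be complemented by a backward pass from the end of the data, so values on both sides of the error can often still be recovered.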

Fig. 9 --- Block diagram of the MPEG-4 general audio encoder with a choice of three quantization and coding table methods. Heavy lines denote data paths, light lines denote control signals.

Version 2 also provides low-delay coding of music and speech (AAC-LD). Low-delay coding is useful in real-time two-way communication where low latency is critical; the total delay at 48 kHz is reduced from 129.4 ms to 20 ms.

However, coding efficiency is moderately reduced: very roughly, LD coding requires about 8 kbps/channel more than the AAC Main profile for comparable quality. Viewed another way, the performance of LD coding is comparable to that of MPEG-1/2 Layer III at 64 kbps/channel. In LD, the frame length is reduced from 1024 to 512 samples, or from 960 to 480 samples, and the filter bank window size is halved. Window switching is not permitted; the look-ahead buffer otherwise used for block switching is eliminated. To minimize pre-echo artifacts in transient signals, however, temporal noise shaping (TNS) is permitted along with window-shape adaptation. A low-overlap window is used for transient signals in place of the sine window used for nontransient signals. Use of the bit reservoir is constrained or eliminated entirely. Sampling frequencies up to 48 kHz are permitted.
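The effect of the shorter frames on latency can be seen from the frame duration $T = N/f_s$ at a 48-kHz sampling frequency. As a rough check, assuming the algorithmic delay is dominated by one frame of buffering plus one frame of window overlap (an assumption about how the 20-ms figure is reached), the 480-sample configuration gives exactly 20 ms:

$$
\frac{1024}{48\,000\ \text{Hz}} \approx 21.3\ \text{ms}, \qquad
\frac{512}{48\,000\ \text{Hz}} \approx 10.7\ \text{ms}, \qquad
\frac{480}{48\,000\ \text{Hz}} = 10\ \text{ms}, \qquad
\frac{2 \times 480}{48\,000\ \text{Hz}} = 20\ \text{ms}.
$$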

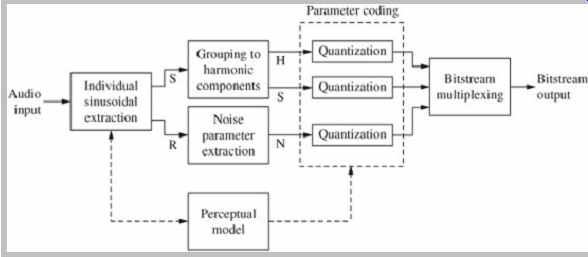

Version 2 defines a Harmonic and Individual Lines plus Noise (HILN) coding tool. HILN is a parametric coding technique in which source models are used to code audio signals. An encoder is shown in Fig. 10. Frames of audio samples are windowed and overlapped; frame length is typically 32 ms at a 16-kHz sampling frequency. The signal is decomposed into individual sinusoids and output as sinusoidal parameters and a residual signal. The model parameters for the components' source models are then estimated: the sinusoidal parameters are separated into harmonic and individual sinusoidal components, and the residual signal is estimated as a noise component. For coding efficiency, sinusoids sharing the same fundamental frequency are grouped into a single harmonic tone, while other sinusoids are treated individually. Spectral modeling, for example using the frequency response of an all-pole LPC synthesis filter, represents the spectral envelope of the harmonic-tone and noise components. The components are perceptually quantized; to decrease bit rate, only the most perceptually significant components are coded. HILN is used for very low bit rates, typically 4 kbps to 16 kbps, with bit-rate scalability. With HILN coding, playback speed or pitch can be altered at the decoder without requiring a special-effects processor. HILN can be used in conjunction with the HVXC speech codec.
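The grouping of sinusoids into a harmonic tone can be sketched as follows. This is a simplified illustration, assuming the fundamental frequency is already known and using a hypothetical 3% relative tolerance; an actual HILN encoder estimates the fundamental and the component parameters itself.

```python
def group_harmonics(freqs_hz, f0_hz, tol=0.03):
    """Split sinusoid frequencies into a harmonic tone and individual lines.

    A sinusoid joins the harmonic tone if it lies within a relative tolerance
    `tol` of an integer multiple of the candidate fundamental f0_hz. Both the
    tolerance and the supplied f0 are illustrative assumptions.
    Returns (harmonic, individual), where harmonic holds (harmonic number, freq).
    """
    harmonic, individual = [], []
    for f in freqs_hz:
        n = round(f / f0_hz)  # nearest harmonic number
        if n >= 1 and abs(f - n * f0_hz) <= tol * n * f0_hz:
            harmonic.append((n, f))
        else:
            individual.append(f)
    return harmonic, individual


print(group_harmonics([220, 441, 659, 1000, 880], 220))
```

The saving comes from the fact that the harmonic tone can be described by one fundamental plus an amplitude envelope, rather than by a separate frequency parameter for every partial.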

Fig. 10: The MPEG-4 standard, Version 2, defines an HILN parametric audio encoder in which source models are used to code audio signals.

Version 2 allows substitution of Bit-Sliced Arithmetic Coding (BSAC), sometimes known as bit-plane coding, in AAC. BSAC is best suited to relatively high bit rates of 48 kbps to 64 kbps/channel and is less bit-efficient at low bit rates.

BSAC replaces Huffman coding of quantized spectral coefficients with arithmetic coding to provide small-step scalability. A sign-magnitude format is used, and the magnitudes are expressed as binary integers up to 13 bits in length. The bits are processed in bit-plane slices, from MSB to LSB significance, and each bit slice is entropy-coded using one of several BSAC models. A base layer contains the bit slices of spectral data within an audio bandwidth determined by the bit rate, as well as scale factors and side information. Enhancement layers add less significant bit slices as well as higher frequency bands, providing greater audio bandwidth. Data packets of consecutive frames are grouped to reduce packet-payload overhead. Using the bitstream layers, the decoder can reproduce a multiple-resolution signal scalable in 1 kbps/channel increments.
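The bit-plane principle behind BSAC's scalability can be shown in a few lines. This sketch only slices sign-magnitude values into planes and rebuilds them from a truncated set of planes; it omits the arithmetic coding and the band-by-band layering of real BSAC, and uses 4-bit magnitudes rather than 13 for brevity. The function names are hypothetical.

```python
def bit_planes(values, nbits):
    """Sign-magnitude bit-plane slicing, MSB plane first.

    Returns (signs, planes), where planes[0] holds the MSB of every magnitude.
    """
    signs = [v < 0 for v in values]
    mags = [abs(v) for v in values]
    if any(m >= (1 << nbits) for m in mags):
        raise ValueError("magnitude exceeds nbits")
    planes = [[(m >> b) & 1 for m in mags] for b in range(nbits - 1, -1, -1)]
    return signs, planes


def from_planes(signs, planes, nbits, planes_used):
    """Rebuild values using only the first `planes_used` planes (MSB first)."""
    out = []
    for i, neg in enumerate(signs):
        m = 0
        for p in range(planes_used):
            m |= planes[p][i] << (nbits - 1 - p)
        out.append(-m if neg else m)
    return out


signs, planes = bit_planes([5, -3, 12, 0], 4)
print(from_planes(signs, planes, 4, 2))  # coarse: only the two MSB planes
print(from_planes(signs, planes, 4, 4))  # all planes: exact values
```

Truncating the stream after fewer planes yields a coarser but still usable spectrum, which is what allows a decoder to stop at any layer.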

Version 2 also allows substitution of Transform-domain Weighted INterleaved Vector Quantization (TwinVQ) coding in AAC. TwinVQ uses spectral flattening and vector quantization and can be used at low bit rates (for example, 6 kbps/channel). Version 2 can also improve audio coding efficiency in applications using object-based coding by allowing selection and/or switching between different coding techniques. Because of its underlying architecture and fixed-length codewords, TwinVQ provides inherently good error robustness.

The High-Efficiency AAC (HE AAC) extension, also known as aacPlus, uses spectral band replication (SBR) to improve AAC coding efficiency by about 30%. HE AAC is backward compatible with AAC and is sometimes used at bit rates of 24 kbps or 48 kbps/channel. The SBR tool was approved in March 2003. Other extensions include the use of parametric coding for high-quality audio, and lossless audio coding.
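The core idea of SBR is that the decoder regenerates the high band from the decoded low band, guided by a compact envelope sent as side information. The sketch below is a heavily simplified illustration on real magnitude values, assuming hypothetical per-segment target energies; actual SBR operates on subband samples of a complex QMF filter bank and also adds noise and sinusoidal components.

```python
import math


def sbr_reconstruct(low_band, envelope):
    """Very simplified spectral band replication on magnitude values.

    low_band: magnitudes of the decoded low-frequency bins (from the core codec).
    envelope: hypothetical transmitted target energies, one per high-band
              segment; each segment spans len(low_band) // len(envelope) bins.
    Each low-band segment is copied upward and rescaled so that its energy
    matches the corresponding envelope value.
    """
    n_seg = len(envelope)
    seg_len = len(low_band) // n_seg
    high = []
    for s, target_energy in enumerate(envelope):
        patch = low_band[s * seg_len:(s + 1) * seg_len]
        energy = sum(v * v for v in patch)
        gain = math.sqrt(target_energy / energy) if energy > 0 else 0.0
        high.extend(v * gain for v in patch)
    return list(low_band) + high


print(sbr_reconstruct([1.0, 2.0, 2.0, 1.0], [1.25, 0.5]))
```

Only the envelope values need to be transmitted for the high band, which is why SBR costs far fewer bits than coding those frequencies directly.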

The High-Definition AAC (HD AAC) extension provides scalable lossless coding. It offers a scalable range of coding resolutions, from perceptually coded AAC, through intermediate near-lossless representations, to a fully lossless high-definition representation. The input signal is first coded by an AAC core-layer encoder; the scalable lossless coding algorithm then uses that output to produce an enhancement layer that provides lossless coding. The two layers are multiplexed into one bitstream.

Decoders can decode only the perceptually coded AAC part, or use the additional scalable information to enhance performance to lossless or near-lossless sound-quality levels. In the scalable encoder, the audio signal is converted into a spectral representation using the integer modified discrete cosine transform (IntMDCT). AAC coding tools such as middle/side (M/S) stereo and temporal noise shaping (TNS) can be applied to the coefficients in an invertible integer manner. An error-mapping process maintains a link between the perceptual core and the scalable lossless layer. The map removes the information that has already been coded in the perceptual path so that only the IntMDCT residuals are coded. The residual is coded with two bit-plane coding processes, bit-plane Golomb coding and context-based arithmetic coding, together with a low-energy mode encoder. Lossless reconstruction is obtained when all of the bit planes are decoded; if only some of the bit planes are decoded, the result is a lossy reconstruction that is still superior to decoding the AAC core alone.
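Integer transforms such as the IntMDCT rely on the lifting principle: a rotation is factored into three shear steps, each of which adds a rounded function of the other value and can therefore be undone exactly. The sketch below shows only this building block, a single integer Givens rotation with lifting parameters p = (cos α − 1)/sin α and u = sin α; it is not a complete IntMDCT, and the function names are our own.

```python
import math


def _lift_params(alpha):
    s = math.sin(alpha)
    if abs(s) < 1e-12:
        raise ValueError("alpha must not be a multiple of pi")
    return (math.cos(alpha) - 1.0) / s, s


def int_rotate(x, y, alpha):
    """Integer approximation of a rotation by alpha using three lifting steps.

    Each step adds a rounded function of the *other* value, so it can be
    reversed exactly by subtracting the same rounded quantity.
    """
    p, u = _lift_params(alpha)
    x = x + round(p * y)
    y = y + round(u * x)
    x = x + round(p * y)
    return x, y


def int_rotate_inverse(x, y, alpha):
    """Exact inverse of int_rotate: undo the lifting steps in reverse order."""
    p, u = _lift_params(alpha)
    x = x - round(p * y)
    y = y - round(u * x)
    x = x - round(p * y)
    return x, y


xy = int_rotate(1000, 0, math.pi / 8)
print(xy, int_rotate_inverse(*xy, math.pi / 8))
```

The integer output (924, 383) approximates the exact rotation (923.88, 382.68), yet the inverse recovers the input bit-exactly, which is the property a lossless layer needs.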

MPEG-4 Coding Tools

The MPEG-4 standard encompasses previous MPEG audio standards. In particular, within MPEG-4, MPEG-2 AAC is used for high-quality audio coding. As noted, MPEG-4 adds several specialized tools to MPEG-2 AAC to achieve more efficient coding. A perceptual noise substitution (PNS) tool can be used with AAC at bit rates below 48 kbps. Using the approach that "all noise sounds alike," PNS can efficiently code noise-like signal components with a parametric representation. This helps overcome the inefficiency of coding noise, which lacks redundancy. PNS exploits the fact that noise-like signals are perceived not as waveforms but through their temporal and spectral characteristics. When PNS is employed, instead of conveying quantized spectral coefficients for a scale-factor band, the encoder conveys a noise substitution flag and the power of the coefficients. The decoder inserts pseudo-random values scaled to the proper noise power level. PNS can be used for stereo coding because the pseudo-random noise values are different in each channel, thus avoiding monaural localization. However, PNS cannot be used for M/S-stereo coding.
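The decoder side of PNS can be sketched as filling a band with random values and rescaling them to the transmitted power. This is an illustration only: the energy units, the uniform distribution, and the per-channel seeding are assumptions of ours, not the normative AAC noise generator.

```python
import math
import random


def pns_fill_band(width, band_energy, seed):
    """Fill one scale-factor band with noise of a given total energy.

    width: number of spectral coefficients in the band.
    band_energy: transmitted noise energy for the band (hypothetical units).
    seed: seeds the pseudo-random generator; a different seed per channel
          gives uncorrelated noise in the left and right channels.
    """
    rng = random.Random(seed)
    noise = [rng.uniform(-1.0, 1.0) for _ in range(width)]
    energy = sum(v * v for v in noise)
    scale = math.sqrt(band_energy / energy) if energy > 0 else 0.0
    return [v * scale for v in noise]


left = pns_fill_band(16, 4.0, seed=1)
right = pns_fill_band(16, 4.0, seed=2)
print(sum(v * v for v in left), sum(v * v for v in right))
```

Both channels receive the same band energy but different waveforms, which is the property that keeps substituted noise from collapsing into a single centered image.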

MPEG-4 also provides a long-term prediction (LTP) tool to be used with AAC. It is a low-complexity prediction method similar to the technique used in speech codecs. It is particularly efficient for stationary harmonic signals and, to a lesser extent, for nonharmonic tonal signals. In this tool, forward-adaptive long-term prediction is applied to preceding frames of spectral values to yield a prediction of the audio signal. The spectral values are mapped back to the time domain, and the reconstructed signal is compared with the actual input signal. Delay (lag) and amplitude-scaling parameters define a prediction signal. The prediction and input signals are mapped to the frequency domain and subtracted to form a residual (difference) signal. Depending on which provides more efficient coding in each scale-factor band, either the input signal or the residual is coded and conveyed, along with side information.
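The lag and gain estimation at the heart of long-term prediction can be sketched in the time domain. This minimal version assumes, for simplicity, that the lag is at least one frame long so the prediction comes entirely from past reconstructed samples, and it uses the least-squares gain ⟨x, p⟩/⟨p, p⟩; the function name and search range are hypothetical.

```python
def ltp_search(history, frame, min_lag, max_lag):
    """Find the lag and gain that best predict `frame` from past samples.

    history: previously reconstructed samples (most recent last).
    frame:   current input samples to be predicted.
    For each lag L, the candidate prediction is the len(frame) samples that
    start L samples back from the end of history. Returns (lag, gain, error).
    """
    n = len(frame)
    best = (None, 0.0, float("inf"))
    for lag in range(max(min_lag, n), max_lag + 1):
        start = len(history) - lag
        if start < 0:
            break
        pred = history[start:start + n]
        pp = sum(p * p for p in pred)
        if pp == 0:
            continue
        gain = sum(x * p for x, p in zip(frame, pred)) / pp  # least-squares gain
        err = sum((x - gain * p) ** 2 for x, p in zip(frame, pred))
        if err < best[2]:
            best = (lag, gain, err)
    return best


pattern = [0.0, 0.7, 1.0, 0.7, 0.0, -0.7, -1.0, -0.7]  # one period of 8 samples
print(ltp_search(pattern * 8, [0.5 * v for v in pattern], 8, 40))
```

For a periodic input, the search returns the period as the lag with a near-zero residual; the encoder would then decide, band by band, whether coding the residual is cheaper than coding the input itself.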

As noted, MPEG-4 also provides TwinVQ as an alternate coding kernel in MPEG-2 AAC. It is used for bit rates ranging from 6 kbps to 16 kbps/channel for audio including music; it is often used in scalable codec designs.

TwinVQ first normalizes the spectral coefficients from the AAC filter bank to a target range, then codes blocks of coefficients using vector quantization (sometimes referred to as block quantization). The normalization process applies LPC estimation to the signal's spectral envelope to normalize the amplitude of the coefficients; the LPC parameters are coded as line spectral pairs. The fundamental signal frequency is estimated and harmonic peaks are extracted; pitch and gain are coded. Spectral envelope values using Bark-related AAC scale-factor bands are quantized with vector quantization; this further flattens the coefficients. Coefficient coding is then performed: the coefficients are interleaved in frequency and divided into subvectors, which allows a constant bit allocation per subvector. Perceptual shaping of quantization noise may be applied. Finally, the subvectors are vector quantized, and quantization distortion is minimized by optimizing the choice of codebook indices. The TNS and LTP tools may be used with TwinVQ.
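The interleaving and vector-quantization steps can be sketched as below. This is a simplified illustration, assuming a single small hypothetical codebook and plain unweighted squared error; TwinVQ itself, as we understand it, uses a two-codebook (conjugate-structure) design with perceptually weighted distortion.

```python
def interleave(coeffs, n_sub):
    """Distribute coefficients round-robin into n_sub subvectors.

    Coefficient k goes to subvector k % n_sub, so each subvector samples the
    whole spectrum rather than a single frequency region.
    """
    return [coeffs[i::n_sub] for i in range(n_sub)]


def nearest_codeword(vec, codebook):
    """Index of the codeword with minimum squared error to vec."""
    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(vec, c))
    return min(range(len(codebook)), key=lambda i: dist(codebook[i]))


def vq_encode(coeffs, n_sub, codebook):
    """Interleave, then replace each subvector by its best codebook index."""
    return [nearest_codeword(v, codebook) for v in interleave(coeffs, n_sub)]


codebook = [(0.0, 0.0), (1.0, 1.0), (-1.0, -1.0), (1.0, -1.0)]  # hypothetical
print(vq_encode([1.0, -1.0, 0.9, -0.8], 2, codebook))
```

Because every subvector has the same length and is replaced by one fixed-size index, the bit allocation per subvector is constant, which is also what gives TwinVQ its fixed-length, error-robust codewords.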

The MPEG-4 audio lossless coding (ALS) tool allows compression and bit-accurate reconstruction of audio signals with resolutions up to 24 bits and sampling frequencies up to 192 kHz. Forward-adaptive linear predictive coding is employed; the predictor can be computed, for example, with the Levinson-Durbin recursion applied to the signal's autocorrelation. The encoder estimates and quantizes adaptive predictor coefficients and uses the quantized predictor coefficients to calculate prediction residues. The residues are entropy-coded with one of several Rice codes or other coding variations. The coded residues, as well as side information such as code indices, predictor coefficients, and a CRCC checksum, are combined to form the compressed bitstream. The decoder decodes the entropy-coded residues and uses the predictor coefficients to calculate the lossless reconstruction signal.
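The encode/decode loop described above can be sketched end to end: Levinson-Durbin on the autocorrelation, coefficient quantization, integer prediction residues, and Rice coding. Several simplifications are assumptions of ours: the standard, as we understand it, quantizes reflection (PARCOR) coefficients and adapts the Rice parameter, whereas this sketch quantizes direct-form coefficients to a hypothetical 12-bit fixed-point precision, uses one fixed Rice parameter, and sends the first `order` samples unpredicted.

```python
SHIFT = 12  # hypothetical fixed-point precision of the quantized coefficients


def autocorr(x, order):
    return [sum(x[n] * x[n - k] for n in range(k, len(x))) for k in range(order + 1)]


def levinson_durbin(r, order):
    """Return a[1..order] for the predictor x_hat[n] = sum_k a[k] * x[n - k]."""
    a = [0.0] * (order + 1)
    err = r[0]
    for m in range(1, order + 1):
        if err <= 0:  # silent or already perfectly predicted signal
            break
        k = (r[m] - sum(a[j] * r[m - j] for j in range(1, m))) / err
        prev = a[:]
        a[m] = k
        for j in range(1, m):
            a[j] = prev[j] - k * prev[m - j]
        err *= 1.0 - k * k
    return a[1:]


def quantize(coefs):
    return [round(c * (1 << SHIFT)) for c in coefs]


def predict(x, n, q):
    # Integer-only arithmetic, so encoder and decoder round identically.
    acc = sum(q[k] * x[n - 1 - k] for k in range(len(q)))
    return (acc + (1 << (SHIFT - 1))) >> SHIFT


def residuals(x, q):
    p = len(q)
    return list(x[:p]) + [x[n] - predict(x, n, q) for n in range(p, len(x))]


def reconstruct(res, q):
    p = len(q)
    x = list(res[:p])
    for n in range(p, len(res)):
        x.append(res[n] + predict(x, n, q))
    return x


def rice_encode(value, k):
    u = 2 * value if value >= 0 else -2 * value - 1  # fold the sign into the LSB
    q, r = u >> k, u & ((1 << k) - 1)
    return "1" * q + "0" + (format(r, "b").zfill(k) if k else "")


def rice_decode_all(bits, k, count):
    out, i = [], 0
    for _ in range(count):
        q = 0
        while bits[i] == "1":  # unary-coded quotient
            q += 1
            i += 1
        i += 1  # skip the terminating "0"
        r = int(bits[i:i + k], 2) if k else 0
        i += k
        u = (q << k) | r
        out.append(u // 2 if u % 2 == 0 else -(u + 1) // 2)
    return out
```

A usage example: for an integer signal `x`, compute `q = quantize(levinson_durbin(autocorr(x, 4), 4))` and `e = residuals(x, q)`; then `reconstruct(e, q)` returns `x` exactly, because the decoder repeats the same integer prediction from the same quantized coefficients.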

The Internet Streaming Media Alliance (ISMA) has specified a transport protocol for streaming MPEG-4 content. This protocol is based on RTSP and SDP for client-server handshaking and on RTP packets for data transmission. MP4 and XMT files are used for interoperability between authoring tools and MPEG-4 servers. MPEG-4 content can be conveyed in various ways, such as via Internet RTP/UDP, MPEG-2 transport streams, ATM, and DAB. FlexMux, a multiplexing tool defined by MPEG-4, uses elements defined in ITU-T Recommendation H.223.

MPEG-7 Standard

The MPEG-7 standard is entitled "Multimedia Content Description Interface." Its aim is very different from the MPEG standards that describe compression and transmission methods. MPEG-7 provides ways to characterize multimedia content with standardized analysis and descriptions. For example, it allows more efficient identification, comparison, and searching of multimedia content in large amounts of data. MPEG-7 is described in the ISO/IEC 15938 standard; Version 1 was finalized in 2001.

Many search engines are designed to use database management techniques to find text and pictures on the Web. However, this content is mainly identified by annotative, text-based metadata that describes outward aspects of works such as keywords, title, author or composer, and year of creation. Creation of this metadata is often done manually, and a text-based description may not adequately describe all aspects of the file.

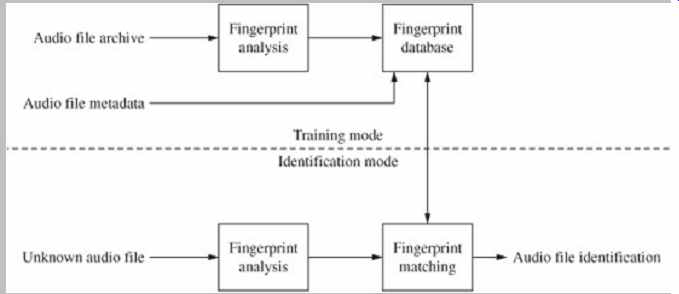

MPEG-7 describes more intrinsic characteristics of multimedia and allows content-based retrieval. In the case of audio, MPEG-7 provides ways to analyze audio waveforms and to manually or automatically extract content-based information that can be used to describe and classify the signal. These descriptors are sometimes called feature vectors or fingerprints. The process of obtaining these descriptors from an audio file is called audio feature extraction or audio fingerprinting. A variety of descriptors may be extracted from one audio file. Descriptors might include key signature, instrumentation, melody, and other parameters that may be derived from the content itself.

Audio signals are described by low-level descriptors. These include basic descriptors (audio waveform, power), basic spectral descriptors (spectrum envelope, spectrum spread, spectrum flatness, spectrum centroid), basic signal parameters (fundamental frequency, harmonicity), temporal timbral descriptors (log attack time, temporal centroid), spectral timbral descriptors (harmonic spectral centroid, harmonic spectral deviation, harmonic spectral spread, harmonic spectral variation, spectral centroid), spectral basis representations (spectrum basis, spectrum projection), and silence descriptors. Other descriptors include lyrics, key, meter, and starting note. Musical instrument sounds can be classified into four families: harmonic, sustained, and coherent; percussive and nonsustained; nonharmonic, sustained, and coherent; and noncoherent and sustained. In addition to music, sound effects and spoken content can also be described.
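To give a feel for what basic spectral descriptors measure, the sketch below computes a spectrum centroid, spread, and flatness for one frame. These are common textbook definitions on a linear frequency axis and are an assumption of ours; the normative MPEG-7 descriptors differ in detail (for example, in frequency scaling and band partitioning).

```python
import math


def spectral_descriptors(power, freqs_hz):
    """Simplified spectrum centroid, spread, and flatness of one frame.

    power: non-negative power-spectrum values; freqs_hz: bin frequencies.
    Not the normative MPEG-7 definitions.
    """
    total = sum(power)
    if total == 0:
        raise ValueError("silent frame")
    # Centroid: power-weighted mean frequency.
    centroid = sum(f * p for f, p in zip(freqs_hz, power)) / total
    # Spread: power-weighted standard deviation around the centroid.
    spread = math.sqrt(sum((f - centroid) ** 2 * p for f, p in zip(freqs_hz, power)) / total)
    # Flatness: geometric mean / arithmetic mean (1.0 for a perfectly flat spectrum).
    if min(power) <= 0:
        flatness = 0.0
    else:
        geo = math.exp(sum(math.log(p) for p in power) / len(power))
        flatness = geo / (total / len(power))
    return centroid, spread, flatness


freqs = [100.0, 200.0, 300.0, 400.0]
print(spectral_descriptors([1.0, 1.0, 1.0, 1.0], freqs))  # noise-like: flat
print(spectral_descriptors([0.0, 0.0, 4.0, 0.0], freqs))  # tone-like: peaked
```

A noise-like frame gives flatness near 1 and a wide spread, while a tonal frame gives flatness near 0 and a narrow spread, which is the kind of distinction a classifier can build on.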

Descriptors also describe information such as artist and title, copyright pointers, usage schedule, user preferences, browsing information, storage format, and encoding. The standard also provides description schemes written in XML. They specify which low-level descriptors can be used in a description, as well as relationships among descriptors and among description schemes. For example, description schemes can be used to characterize timbre, melody, tempo, and nonperceptual audio signal quality; these high-level tools can be used to compare and query music.

The MPEG-7 standard also provides a description definition language based on the XML Schema Language; it defines the syntax for using description tools. The language allows users to write their own descriptors and schemes. Binary-coded representations of descriptors are also provided. The standard does not specify how the waveform is coded; for example, the content may be either a digital or analog waveform. As with many other standards, although MPEG-7 includes standardized tools, informative instructions are provided for much of the implementation. This allows future improvement of the technology while retaining compatibility. Some parts of the standard such as syntax and semantics of descriptors are normative. MPEG-7 allows searches using audiovisual descriptions. For example, one could textually describe a visual scene as "the witches in Macbeth" to find a video of the Shakespeare tragedy, sketch a picture of the Eiffel Tower to find a photograph of the structure, or whistle a few notes to obtain a listing of recordings of Beethoven's Ninth Symphony. In addition to a search and retrieval function, MPEG-7 could be applied to content-based applications such as real-time monitoring and filtering of broadcasts, recognition, semi-automated editing, and playlist generation. There is no MPEG-3, MPEG-5, or MPEG-6 standard.