by DAN SWEENEY

In 1977, when consumer awareness of digital audio was still almost nil, a seminar on the subject aroused widespread interest in another part of the sound industry: the relatively small segment concerned with meeting the needs of the hearing impaired. The seminar, held in West Berlin, was part of an annual international meeting of industry people known as the Congress for Hearing Professionals, where a number of papers were read concerning the application of digital audio technology to hearing aids. The specific topic was digital signal processing (DSP), which has only more recently begun to excite those involved in consumer audio. DSP, it was assumed at the time, would provide the key to precise and independent calibration of every performance parameter involved in the compensatory amplification of environmental sounds. Gain, frequency response, and output level in hearing aids could be manipulated within the digital domain, presumably with no noise penalty and with none of the artifacts that inevitably accompany analog signal processing. With so much power and flexibility at his disposal, the dispensing audiologist could provide each patient with the very best fit and could apply truly selective amplification, thus eliminating the problems of unnaturally loud background noise and uncomfortably intense transient reproduction that have plagued hearing aid wearers since the dawn of electronically amplified aids more than 60 years ago.

In 1977, such discussions were purely academic. The electronic devices available then were far too bulky and inefficient to permit the construction of a digital hearing aid of reasonable size, and, in any case, the techniques of digital encoding in use at the time were still, in some measure, experimental. But the promise was there, and the need was certainly there also.

In the past two years, the promise has begun to be fulfilled as the first digital aids have appeared on the market. Today digital audio circuitry seems likely to effect as big a revolution in the hearing aid field as it has in consumer audio, though the course and the impact of the digital revolution in hearing aids are, and will be, very different from what occurred in the music industry.

The hearing aid field is one segment of audio electronics that most of us who are involved in music reproduction usually forget. As a group we tend to pride ourselves on our hearing acuity, and the phenomenon of hearing impairment involves the unthinkable, the loss of our ability to make extremely fine aural discriminations. And yet the very large majority of us will suffer noticeable hearing impairment in time, not because it is the human condition to suffer loss of hearing--for surely it is not--but simply because we live in environments where our ears are continually insulted. And, of course, those of us who make our living in some area of the music business are doubly at risk, since we are frequently exposed to high intensity sounds in the normal course of practicing our professions, very often by our own hands.

Hearing aid technology--audio prosthetics if you will--offers those of us afflicted with the unthinkable at least a partial solution. It also offers us food for thought, because audiologists have often been far in advance of consumer audio manufacturers in conducting meaningful psychoacoustic research. Hearing aid researchers were discussing transient distortion in the early 1950s and the effect of outer ear structure on localization back in the '70s. For the hearing aid specialist, such matters were literally health issues, and the insights these researchers gained on human hearing, both normal and impaired, should be of interest to anyone involved in audio. Moreover, the current and future involvement of the hearing aid industry with DSP will surely have ramifications touching on every area of the audio industry.

Why Digital?

The appeal of digital audio to the manufacturers of hearing aids is quite different from the appeal digital exerts on the three other segments of the audio industry where it has become established, namely consumer audio, recording, and telecommunications. In music reproduction and telephony alike, digital encoding is attractive chiefly because it protects the signal against losses during transmission. In the hearing aid field, on the other hand, electrical signal losses are not especially significant. A hearing aid is, in essence, nothing more than a miniature P.A. system with microphone, amplifier, and speaker (see hardware sidebar). The amplifiers used in today's hearing aids meet high-fidelity standards for noise and distortion, so digitizing the signal at the amplifier stage would do little to lower the residual noise floor of the circuitry.

The real reason behind the push for digitization is the hope that by powerful digital analysis and signal processing, hearing aids can be made to do a better job in compensating for hearing loss. This statement implies that analog aids have serious limitations in performance, and indeed they do. Simply stated, the conventional analog aids of today do not produce a good approximation of normal hearing in even mildly hearing-impaired persons.

The hearing-aid industry agrees that hearing aids, by and large, do not compensate for hearing losses nearly as successfully as, for example, contact lenses compensate for simple myopia. Indeed, the corrective-lens analogy is so unflattering to the hearing-aid industry that a fairer comparison would be to artificial limbs: both are better than nothing, but neither is truly equivalent to the normally functioning natural organ.

Here, I am describing the typical nondigital, nonprogrammable hearing aids generally in use today, not the handful of digital or hybrid aids just now appearing on the market, which may be seen as challenging, and superior to, the conventional aid. I would stress that current analog aids, for all their limitations, are highly sophisticated devices and are the culmination of nearly 70 years of continuous research and engineering. The analog microphones, amplifiers, and earphone transducers available today in hearing aids are superbly linear but aren't likely to become significantly better. Indeed, an audiologist from the 1940s would feel that most of the long-term design goals of the pioneering hearing-aid engineers have been met by current designs.

Yet a high proportion of hearing impaired persons cannot adapt to conventional hearing aids, while most successful wearers complain of poor speech intelligibility at times and difficulty in localizing sounds.

To understand why conventional aids fail, it is necessary to understand something about the problems they aim to correct. The majority of persons seeking relief through electronic hearing aids suffer from simple sensorineural losses, the same liability that afflicts nearly all of us to some small degree. The cause of the hearing loss is the destruction of some of the cilia in the inner ear through progressive exposure to traumatic noise levels (see sidebar on hearing loss and hearing aids). The trauma-inducing stimulus could be a few gunshots at the practice range, years of working in a noisy factory, or playing music in a band. But whatever the cause, a common cluster of symptoms tends to manifest itself.

First, the losses tend to be frequency dependent, with the more severe losses generally occurring in the upper frequencies. Second, the dynamic range of sounds tolerable to the individual is compressed. For example, the person may have a 20 dB loss at 4 kHz, which entails a 20 dB higher threshold of audibility at that frequency, yet the maximum intensity level the person can tolerate remains the same as before, say 105 dB. All told, the individual's effective range of perception has been cut by 20 dB in that frequency range, a phenomenon known as recruitment, or "accelerated growth of loudness," as Edgar Villchur describes it. A third common symptom is an inability to follow a conversation in the presence of background noise when a hearing aid is being used. Finally, most of the sensorineurally impaired have problems in localizing sounds, particularly when they are using their aids.

It is easy to conclude from even the briefest examination of the problems of the hearing impaired that the design model of the hearing aid as a miniature P.A. system is wholly inadequate. A P.A. system ideally is linear in both frequency and gain: all frequencies get the same boost, and loud input signals are amplified just as much as soft ones. Such linearity does not meet the needs of the individual with mild to moderate sensorineural losses. He hears all loud sounds and most soft sounds perfectly well. He has problems only in a certain frequency range, but that range generally happens to be where the consonant sounds occur, and consequently he tends to have trouble understanding speech. A linear frequency response restores the high frequencies for consonants, but at the price of overwhelming bass and midrange, while linear gain amplifies quieter sounds but turns ordinarily loud sounds into excruciatingly loud ones.

Historically, hearing-aid manufacturers were slow to address these problems with appropriate signal processing, but almost all hearing aids made today use at least some form of it, with broadband equalization and single-band compression at the most basic level. Even with this technology, most hearing-aid wearers still experience problems that appear to lie beyond the reach of simple tone controls and wideband compression; hence the interest in DSP and extensive individual programming options.

Self-Contained Amplification and Signal Processing

If digitization is relatively new to the hearing-aid field, sophisticated circuit design and penetrating investigations into psychoacoustics are not. Hearing aids have always presented extraordinary design challenges to their manufacturers, and throughout the history of the device, engineers and audiologists have responded by employing the most advanced audio technology available at the time.

Electric hearing aids themselves are just about as old as the century. Before 1900, the hearing impaired might resort to hearing trumpets, large and clumsy horns that provided only marginally better acoustical coupling between the eardrum and the surrounding air, but no practical prosthesis existed.

Early electrical hearing aids were essentially adaptations of the telephone. A carbon microphone modulated an electrical current within a battery-powered circuit, and the current in turn activated an earphone that used an electromagnetic transducer. No amplification occurred within the electrical circuit, so the transduction process resulted in a net loss of energy. Furthermore, the carbon transducer was highly nonlinear, and overall frequency response was extremely peaky. And yet, the tightly fitting earpiece did transfer acoustical energy very efficiently to the eardrum, and carbon hearing aids provided up to a 50-dB increase in acoustical pressure at the eardrum in the midrange.

This carbon aid was, by modern standards, a cumbersome device, with separate external microphone, battery pack, and earphone, but it did provide some slight relief to mildly hearing-impaired persons, and its superiority to the hearing trumpet was undeniable. It was also relatively cheap, a factor that kept it on the market until the late 1940s, long after more advanced technology was widely available.

Shortly after the carbon aid appeared, in 1906 in fact, Lee De Forest invented the triode amplifying tube, ushering in the electronic age. In less than a decade the triode would be widely used in radio transmission, and engineers in other areas of communications technology were beginning to explore its potential as well. Hearing aids represent one of the very first consumer applications of the triode amplifying tube, and the earliest vacuum tube hearing aid was developed in 1921 by Earl Hanson. Several others were available by the end of the decade.

Early electronic aids were not really portable and were limited to desktop use; the amplifying circuitry alone occupied a box the size of a table radio. The pressure for miniaturization in other areas of electronics eventually led to smaller tubes and batteries and the introduction of truly portable electronic aids in the late '20s. The first wearable electronic aids were still quite bulky by present standards, requiring two separate cabinets, one for the batteries and another for the microphone and amplifier. The cabinets were not concealable but were worn externally like camera equipment, and a single earpiece took the output of the amp. Such hearing aids were known as "body aids" because the housings containing the guts of the systems were generally worn on the upper body or carried in a coat pocket. At the very end of the decade, a few aids appeared with battery and amp in one housing, the form almost all hearing aids would retain until the 1960s.


Ungainly acoustic horns, in a variety of shapes and sizes, provided 10 to 20 dB of gain and required no batteries.

above: Even without tubes or transistors, the amplification of carbon microphone aids, and their tight coupling to the ear, effectively increased average sound pressure at the ear by anywhere from 10 to 50 dB.

Prewar electronic aids were expensive, and in the depression-ridden '30s they did not sell in large numbers. Indeed, prior to World War II most electronic hearing aids produced were essentially experimental and appeared in small pilot runs. But in the aftermath of World War II, the electronic hearing-aid industry exploded.

During the war years, the U.S. military--perhaps inevitably--evinced little concern for protecting the hearing of its troops, and thousands of young men returned from the war with premature hearing losses from the effects of explosions and aircraft engine noise. Veterans' benefits provided these men with the means of paying for the still expensive electronic aids, and the Veterans Administration undertook an extensive research program on hearing loss and the means of treating it. The tube-amplified body aid came into its own.

World War II itself had given tremendous stimulus to vacuum tube technology, and a number of ultraminiature tubes had been developed that proved godsends to the hearing-aid industry. (It is no accident that in the aftermath of the war both high fidelity and musical instrument amplifiers developed rapidly as well.) But the effects of war-related hearing research were equally important to the development of the hearing aid, and they were to some extent detrimental.

Early research studies published by the Veterans Administration and Harvard University stated that linear amplification was preferable to compensatory equalization, and that bilateral aids provided no performance advantage over monaural ones. Current studies have come up with sharply different results in both areas, and a couple of reasons may be given for the discrepancies. The cues by which persons localize sounds are better understood today than in the '40s, so more appropriate tests can be devised. Also, modern researchers are able to evaluate an aid's real response at the eardrum, not just its acoustic output in free air. At any rate, during the '40s and '50s, most audiologists accepted as valid the findings of the Harvard and government research.

The general acceptance of the findings of the Harvard and Veterans Administration researchers can certainly be explained by the prestige of the bodies sponsoring the research. But the lack of any vigorous dissent is still somewhat puzzling because by this time hearing aid manufacturers, as well as dispensing audiologists, were well aware that hearing loss is nearly always frequency dependent and that recruitment poses a serious problem for a good portion of the hearing impaired. In fact, both compression and equalization were offered in a few hearing aids of the early '50s in spite of a lack of solid research to support such measures, and in spite of the extreme difficulty in executing the signal processing circuitry with vacuum tubes contained in a cabinet the size of a deck of cards.

The fullest development of signal processing lay in the future, however, and before hearing aids could incorporate the hundreds of electrical components necessary to execute sophisticated signal processing, the transition from vacuum tubes to transistors would have to occur. Interestingly, this transition would take place within the hearing aid industry almost as soon as production transistors were available.

Indeed, it occurred before transistors were used to any extent in computers, and also long before they appeared in consumer audio components. The first hybrid transistor hearing aid appeared in 1952, and the first fully transistorized unit followed a year later. By the mid-'50s tubes were passé. The heat, size, and inefficiency of tubes were simply unacceptable in the hearing aid field. The attraction of transistors was irresistible, not only by virtue of their greater efficiency, but also because they were much smaller and much less conspicuous.

For a period of about 20 years from the early '50s, when transistors first appeared, to the early '70s, the hearing aid manufacturers concentrated their energies on reducing the size of the physical plant, and secondarily on improving transducers. Signal processing was generally given short shrift.

The very first transistors were relatively bulky components with pins and sockets like miniature vacuum tubes, and their primary appeal was on the basis of their higher efficiency, not their size. But by the late '50s, when the first integrated solid-state circuits were developed, hearing aid manufacturers could begin to contemplate an inconspicuous substitute for the traditional body aid.

The miniaturization of hearing aid circuitry was a gradual process, and the first ICs did not permit the production of hearing aids that could be concealed in the ear canal, as has been achieved in some present-day designs. The first non-body aids instead took the form of eyeglass hearing aids, with the circuitry and microphone concealed in the frame of a pair of false eyeglasses and an earphone extending from one of the arms. Later, behind-the-ear aids appeared, with the circuitry contained within a molded enclosure that fit directly in back of the external ear. Still later, in-the-ear aids were developed that put everything within an ear-mold fitting into the opening of the ear canal. In-the-ear-canal aids, the last to appear, represent the ultimate in miniaturization and concealment.

As a matter of interest, in-the-ear aids are the dominant type at present. The more recent in-the-canal aids push miniaturization to the limits of present technology and suffer from a tendency to feedback which miniaturization alone cannot solve.

In the meantime, as transistors and ICs got smaller, transducers and amplifiers improved at a steady pace.

As we have seen, early hearing aids used carbon transducers, but these were later replaced by electromagnetic devices. By the early '50s, the balanced armature type earphone had become the industry standard, and it remains so to this day. Modern balanced armature transducers have a frequency range of roughly 50 Hz to 10 kHz, while greatly exceeding the efficiency of the moving-coil transducers used in consumer headphones. (Balanced armature driver elements may in fact approach 80% efficiency.) Electret transducers are generally conceded to offer slightly better fidelity, but their low efficiency has ruled out their use in practical consumer products.

Microphones in modern high-performance hearing aids are almost invariably of the electret type and have been since the late '60s. In some cases, the capsules are of the same type used in music recording.

Most authorities on hearing aid design feel that current microphones are very highly developed and that dramatic improvements in performance are unlikely in the foreseeable future.

Hearing aid amplifiers, for the most part, are of the same general design as those used in high-fidelity applications: several stages of voltage amplification followed by a power-output stage running in Class AB. Class A hearing aid amplifiers have been made, but low efficiency has militated against their general adoption. Experiments have also been conducted with floating power-supply rails and Class D operation, both proven techniques for improving efficiency over Class AB operation. Etymotic Research's new K-Amp chip, manufactured by Knowles, uses a Class D power amplifier.


=============

The Basics of Hearing Aid Hardware

At the most fundamental level, modern hearing aids may be regarded as miniaturized public address systems (perhaps "private address system" would be an apt term). With the singular exception of the cochlear implant aid, all hearing aids consist of the following: a microphone to pick up the sounds in the listener's environment and convert them into electrical impulses, an electronic amplifier, and a loudspeaker. An internal battery powers the unit.

Except in the case of the bone conduction type (see below), a custom-made earpiece seats the loudspeaker in the ear canal. In most modern aids, the earpiece holds the amplifier and microphone in addition to the loudspeaker. If the earpiece (containing all of the hearing aid components) extends into the external structure of the ear, the aid is called an in-the-ear aid, while if the earpiece is contained entirely within the ear canal, it is an in-the-ear-canal aid.

In hearing aid nomenclature, the loudspeaker is referred to as a receiver, or, more rarely, as an earphone. A couple of types of hearing aids do not use receivers in the usual sense. Bone conduction hearing aids use a special type of transducer to excite vibrations in the skull behind the ear. Bone conduction aids exhibit poor frequency response and fidelity, and are only used with patients with deformities of the outer ear or severe ear canal drainage problems.

Cochlear implants are surgically implanted devices that do not amplify sound at the eardrum but function almost as surrogate ears. Electrical impulses generated in the device stimulate the auditory nerves directly, bypassing the organs of the middle and inner ear. Cochlear implants are only recommended for patients with profound hearing loss verging on total deafness and must be regarded as palliatives at best. The device itself was developed by 3M, which still controls the market for cochlear implants.

Most present-day hearing aids are compact units with all components contained within the earpiece. Less common are behind-the-ear aids where the electrical circuitry is placed in a curved module located directly behind the ear, while the microphone and receiver reside in the earpiece.

A variant on this approach is the eyeglass aid where the electronics are placed in the frame of a pair of false eyeglasses (this type has practically disappeared from the market). The oldest and least popular form of hearing aid today is the body aid where electronics occupy a small box worn against the body. Progress in chip design has rendered the body aid almost obsolete with the one significant exception discussed in the text.

==============

Signal Processing: The Focus of Current Research

Hearing aids, like high-fidelity systems for the home, are ultimately assemblages of components. Transducers are bought from one manufacturer, amplifiers from another, signal processing circuits from still another. The parts are then assembled by the company whose brand name the final product bears.

A few companies supply the raw components used in hearing aids, but many companies compete in the market. Not surprisingly, a similar level of performance tends to be encountered at specific price points.

Nevertheless, the hearing aid field is competitive, and most of the competition and innovative research among manufacturers tends to center on signal processing circuits. This, as we have seen, is where digitization comes into play.

Generally, high-performance hearing aids, however complex their circuitry may be, use only five kinds of signal processing: variable gain, equalization, compression, limiting, and steady-state noise reduction. Since only the last involves what might be termed intelligent circuitry, the question arises why digitization would be deemed necessary or desirable, especially since DSP circuitry requires more power to operate than analog signal processing electronics.

In fact, only one hearing aid on the market, the Nicolet Phoenix, actually converts the main signal into a digital code. Several other high-performance aids are of the hybrid type, however, using digital circuits to exercise control functions on the analog signal. These include the Audiotone/Miracle Ear Dolphin System, the Ensoniq Sound Selector, the Maico/Bernaphon PHOX (Programmable Hearing Operating System), the Resound Personal Hearing System, the 3M Memory Mate, and the Widex Quattro. The filters that perform the signal processing in these devices are purely analog, but the circuits that vary the filter parameters are digital.

There are several reasons behind the move toward digital control circuits. The first is a matter of sheer physical space. The chief benefit of offering a hearing aid with highly flexible signal processing is a better fit for each individual patient, and such flexibility in turn entails a multitude of controls. There's simply nowhere to put a multitude of controls on a modern hearing aid, so the programming of the aid necessarily has to be done remotely, and almost certainly by digital means.

A second reason for digitizing control functions is to tie them in with the diagnostic process. Most hearing aid manufacturers touting sophisticated signal processing advocate the use of combined testing and fitting programs run on a personal computer. The program interprets patient response and prescribes compensatory adjustments on the aid itself. Digital control circuitry within the aid can interface easily with the diagnostic computer.

Furthermore, digital control circuits commonly exceed the precision of analog trimmers, enabling the dispenser and the patient to calibrate the aid very accurately.

Finally, the programmable aid makes refitting very easy. Most analog aids have a very limited range of tone controls, and the basic equalization curve is set at the factory per the dispenser's recommendations. If the setting proves problematic, the aid must be sent back to the factory. A programmable aid's equalization setting, on the other hand, can be changed with a few keystrokes.
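To make the idea of digital control concrete, here is a minimal sketch of how a programmable aid might hold its fitting: each analog filter parameter is stored as an integer control code with a fixed step size, and refitting means rewriting one code rather than shipping the aid back. The parameter names, ranges, and step sizes are hypothetical, chosen purely for illustration; they are not the storage format of the Phoenix, the Dolphin, or any other product named above.

```python
# Hypothetical control-word layout: name -> (minimum value, step size).
# Illustrative assumptions only; no real product's format is implied.
PARAM_SPECS = {
    "gain_db": (0.0, 1.0),        # overall gain, 1 dB per step
    "corner_hz": (500.0, 125.0),  # equalizer corner frequency, 125 Hz per step
    "comp_ratio": (1.0, 0.1),     # compression ratio, 0.1 per step (1.0 = linear)
}


def encode(name, target):
    """Quantize a desired setting to the nearest integer control code (never below 0)."""
    minimum, step = PARAM_SPECS[name]
    return max(0, round((target - minimum) / step))


def decode(name, code):
    """Convert a stored control code back into the parameter value it selects."""
    minimum, step = PARAM_SPECS[name]
    return minimum + code * step


def refit(program, name, target):
    """Return a copy of the program with one parameter re-quantized.
    The original is left untouched, so the previous fitting can be restored."""
    updated = dict(program)
    updated[name] = encode(name, target)
    return updated


# An initial fitting, then a refit of the corner frequency from the keyboard.
fitting = {name: encode(name, value) for name, value in
           [("gain_db", 25.0), ("corner_hz", 1500.0), ("comp_ratio", 2.0)]}
refitted = refit(fitting, "corner_hz", 2000.0)
print(fitting, refitted, decode("corner_hz", refitted["corner_hz"]))
```

The step size is what fixes the precision: every setting the dispenser asks for lands on a known, repeatable value, which is the practical sense in which digital control can outdo an analog trimmer.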

above: The tube-amplified aids of the 1940s (left) quickly gave way in the '50s to more compact, transistorized models (right).

Signal Processing Strategies

Compensatory equalization, a component of every digitally programmable system in use today, appears to have been the first signal processing technique applied to hearing aids. Shelving filters limiting gain in the lowest frequencies have been available since the late '40s.

Later, simple, broadband tone controls appeared, and these still characterize most aids today. But the manufacturers of programmable, digitally controlled aids generally offer considerably more flexibility.

The most sophisticated hearing aid equalizer in the industry is currently offered by Ensoniq, a company until now active chiefly in the musical instrument field. Ensoniq's equalizer allows remote calibrations from a diagnostic computer. The equalizer has thirteen bands, with third-octave equalization in all but the two lowest bands where whole octave equalization is provided.

The equalizer developed by Ensoniq is the product of an extensive research program and reflects the company's position that precise equalization is the key to restoring hearing losses prosthetically. According to Christine Christy, an audiologist employed by the company, Ensoniq's own research has demonstrated that with correct equalization little further signal processing, such as compression and noise reduction, is necessary, although the Ensoniq aid does include single-band 2:1 compression.

Ensoniq further claims that its hearing aids can be made virtually transparent to persons with normal hearing, a claim also put forth by Etymotic Research, a company with a very different philosophy of signal processing.

Although no manufacturer of programmables relies on equalization alone, all programmables currently on the market do afford the user some degree of frequency shaping. Typically only broadband equalization is used, but the corner frequencies of the bands are generally adjustable and, in some cases, so is the slope. Most of the programmables also have a single narrow preset filter to cope with the ear canal's natural resonance, which occurs at roughly 3 kHz.
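Broadband frequency shaping with an adjustable corner frequency and slope can be approximated on paper as a chain of identical first-order high-pass sections, each contributing roughly 6 dB per octave of roll-off below the corner. The sketch below assumes exactly that idealized model; real programmables may use other filter types, and the 30 dB gain and 1 kHz corner in the example are arbitrary.

```python
import math


def highpass_gain_db(freq_hz, corner_hz, order=1):
    """Magnitude response, in dB, of `order` identical first-order high-pass
    sections in cascade. Each section is 3 dB down at the corner and approaches
    a 6 dB/octave roll-off well below it, so `order` sets the slope."""
    ratio = freq_hz / corner_hz
    one_section = 20.0 * math.log10(ratio / math.sqrt(1.0 + ratio * ratio))
    return order * one_section


def shaped_gain_db(freq_hz, overall_gain_db, corner_hz, order=1):
    """Aid gain at one frequency: nearly full gain well above the corner,
    progressively less for bass and midrange below it."""
    return overall_gain_db + highpass_gain_db(freq_hz, corner_hz, order)


# Example: 30 dB of gain, 1 kHz corner, two sections (about 12 dB/octave).
for f in (250, 500, 1000, 2000, 4000):
    print(f, "Hz:", round(shaped_gain_db(f, 30.0, corner_hz=1000.0, order=2), 1), "dB")
```

With two sections the example passes nearly the full 30 dB at 4 kHz while cutting 250 Hz by roughly 25 dB, the kind of low-frequency restraint described above; moving `corner_hz` or `order` is the software analog of adjusting corner frequency and slope.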

Compression and limiting are also very commonly used signal processing techniques among the programmables. These processes allow compensatory amplification while not exceeding a certain absolute sound pressure level, thus tailoring the signal to the dynamic limitations of the recruitment sufferer.

Recall that recruitment entails a rise in the individual's threshold of audibility with no corresponding rise in the threshold of discomfort. Compressing the sound to fit within the narrow window of comfort and intelligibility is the obvious answer.
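Using the numbers from the earlier recruitment example (threshold raised 20 dB at 4 kHz, discomfort still at 105 dB), the arithmetic of fitting sound into the window takes only a few lines. The sketch assumes a normal threshold of 0 dB and a straight-line mapping in decibels; both are simplifications for illustration, not a fitting prescription.

```python
NORMAL_THRESHOLD_DB = 0.0     # assumption: normal threshold of audibility taken as 0 dB
IMPAIRED_THRESHOLD_DB = 20.0  # threshold after the 20 dB loss in the text's example
DISCOMFORT_DB = 105.0         # tolerance ceiling, unchanged by the loss

# Ratio needed to squeeze the normal range into the impaired window.
REQUIRED_RATIO = (DISCOMFORT_DB - NORMAL_THRESHOLD_DB) / (DISCOMFORT_DB - IMPAIRED_THRESHOLD_DB)


def linear_output_db(input_db, gain_db=IMPAIRED_THRESHOLD_DB - NORMAL_THRESHOLD_DB):
    """Linear aid: every input level receives the same boost."""
    return input_db + gain_db


def compressed_output_db(input_db):
    """Straight-line map (in dB) of the normal range onto the impaired window:
    the softest normally audible sound lands at the new threshold and the
    loudest tolerable sound stays at the discomfort ceiling."""
    return IMPAIRED_THRESHOLD_DB + (input_db - NORMAL_THRESHOLD_DB) / REQUIRED_RATIO


print(round(REQUIRED_RATIO, 2))  # compression ratio for this example
print(linear_output_db(95.0), round(compressed_output_db(95.0), 1))
```

A linear aid with 20 dB of gain lifts a 95 dB sound to 115 dB, well past the 105 dB ceiling, whereas the compressive map needs a ratio of only about 1.24:1 to place the whole normal range inside the 20 to 105 dB window. Because losses are usually greater at high frequencies, the required ratio differs from band to band.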

The need for compression was seen as early as the '30s, but the circuitry for accomplishing it in a relatively unobtrusive manner was extremely bulky. Very few of the vacuum tube aids of the early '50s used true compression. Most relied instead on the crude limiting provided by designing the amplifier to have no headroom. A true compressor reduces gain above a certain threshold level and does so only when the threshold is exceeded for a certain predetermined duration.

To avoid unnatural pumping and breathing effects, most compressors are given release times considerably longer than their attack times. The distinction between compressors and limiters has become rather hazy, but according to Mead Killion, president of Etymotic Research, any device with more than a 4:1 ratio should be considered a limiter rather than a compressor, at least in the context of the hearing aid industry.
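The threshold, ratio, and unequal attack and release times described above can be sketched as a static gain curve followed by a smoothing stage. This is one common textbook approach, not the circuit of any aid mentioned here; the smoothing stands in for the "predetermined duration" in the text, and the 1 kHz control rate, 5 ms attack, 50 ms release, 60 dB threshold, and 2:1 ratio are assumed values. Killion's 4:1 dividing line is included as a simple label.

```python
import math


def static_gain_db(level_db, threshold_db, ratio):
    """Gain change (dB) demanded by the static curve: none below threshold;
    above it, output rises only 1/ratio dB per dB of input."""
    if level_db <= threshold_db:
        return 0.0
    overshoot = level_db - threshold_db
    return overshoot / ratio - overshoot  # negative value = gain reduction


def smooth_gain(targets_db, attack_ms, release_ms, rate_hz):
    """One-pole smoothing with separate time constants: the gain falls quickly
    (attack) when reduction is demanded and recovers slowly (release) afterward."""
    a_attack = math.exp(-1.0 / (attack_ms * 1e-3 * rate_hz))
    a_release = math.exp(-1.0 / (release_ms * 1e-3 * rate_hz))
    gain, history = 0.0, []
    for target in targets_db:
        coeff = a_attack if target < gain else a_release
        gain = coeff * gain + (1.0 - coeff) * target
        history.append(gain)
    return history


def classify(ratio):
    """Killion's rule of thumb as quoted in the text: above 4:1, call it a limiter."""
    return "limiter" if ratio > 4.0 else "compressor"


# Assumed scenario: 200 ms at 90 dB, then 200 ms at 50 dB, at a 1 kHz control rate.
levels = [90.0] * 200 + [50.0] * 200
targets = [static_gain_db(lvl, threshold_db=60.0, ratio=2.0) for lvl in levels]
gains = smooth_gain(targets, attack_ms=5.0, release_ms=50.0, rate_hz=1000.0)
print(round(gains[19], 1), round(gains[199], 1), round(gains[219], 1), classify(2.0))
```

In this run the gain settles within about 20 ms of the loud burst but has recovered only about a third of the way 20 ms after it ends, the asymmetry that keeps a compressor from audibly pumping.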

A compressor is a rather sophisticated device, and while the circuitry can be, and has been, executed with vacuum tubes, an integrated circuit is an altogether more practical proposition. High dynamic range compressors with 2:1 compression ratios were introduced to the hearing aid industry in the late '60s by an engineer named Hyman Goldberg. Such compressors, termed AGCs (automatic gain controls) in industry parlance, began to become commonplace in hearing aids in the '70s.

The ubiquity of AGC in modern hearing aids is testimony to its effectiveness, but many users complain of unnatural effects from the circuit's operation. Automatic gain control circuits are typically broadband in their operation, and thus they compress dynamic range in the lower frequencies, where the aid may provide little or no gain. According to Edgar Villchur, another problem with single-band compressors is that they're prone to locking up in the presence of a high-intensity signal at a single frequency. Keith Wilson of 3M notes a further defect: vowels tend to be compressed more than consonants, and due to the slow release characteristics of the compressor, the vowels can swamp the voiced consonant sounds. Compressors also tend to do little to improve speech intelligibility or to mitigate the effects of background noise.

Recently, two companies, both of them manufacturers of programmable aids, have introduced aids with dual band compression in an effort to overcome some of the limitations of wideband compression. Dual band compression provides for two separate compressor circuits, the more powerful of which is intended for the upper frequencies where recruitment is ordinarily manifested more strongly.
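The difference between wideband and dual band operation can be sketched on band levels alone. In this hypothetical Python example, a loud low-frequency sound and a soft high-frequency consonant are fed to each scheme; the threshold, the two ratios, and the simple two-band split are assumptions for illustration (real aids split the waveform with filters and also apply frequency-shaped gain, both omitted here).

```python
import math


def compressor_gain_db(level_db, threshold_db, ratio):
    # Static gain reduction: 0 dB below threshold, (1 - 1/ratio) dB per dB above.
    over = max(0.0, level_db - threshold_db)
    return -over * (1.0 - 1.0 / ratio)


def power_sum_db(*levels_db):
    # Combine band levels as incoherent power, as a broadband detector sees them.
    return 10.0 * math.log10(sum(10.0 ** (l / 10.0) for l in levels_db))


def broadband(low_db, high_db, threshold_db=60.0, ratio=2.0):
    # One detector, one gain: both bands receive the same reduction.
    g = compressor_gain_db(power_sum_db(low_db, high_db), threshold_db, ratio)
    return low_db + g, high_db + g


def dual_band(low_db, high_db, threshold_db=60.0, low_ratio=1.5, high_ratio=3.0):
    # Separate detectors and gains; the stronger ratio sits in the high band,
    # where recruitment is ordinarily more pronounced.
    return (low_db + compressor_gain_db(low_db, threshold_db, low_ratio),
            high_db + compressor_gain_db(high_db, threshold_db, high_ratio))
```

With an 85 dB low-frequency sound and a 50 dB high-frequency consonant, the broadband version turns the consonant down to about 37.5 dB because its single detector responds to the loud low band, while the dual band version leaves the consonant at 50 dB and compresses only the band that is actually loud.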

The two companies currently offering dual band compression are Resound and 3M. (3M combines dual band compression with highly flexible equalization.) A third company, Etymotic Research, has designed a chip that combines single band compression with level-dependent high-frequency boost, which is said to provide the same benefits as true dual band compression.
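The article does not describe how Etymotic's chip works internally, so the following Python sketch is only a hypothetical illustration of the general idea of level-dependent high-frequency boost: treble gain that is largest for soft sounds and fades away for loud ones, added on top of a single band compressor. All numbers (15 dB maximum boost, 50 and 80 dB knees, 60 dB threshold, 2:1 ratio) are assumptions.

```python
def hf_boost_db(level_db, max_boost_db=15.0, soft_db=50.0, loud_db=80.0):
    """Hypothetical treble boost that shrinks linearly as input level rises."""
    if level_db <= soft_db:
        return max_boost_db
    if level_db >= loud_db:
        return 0.0
    frac = (level_db - soft_db) / (loud_db - soft_db)
    return max_boost_db * (1.0 - frac)


def high_band_out_db(level_db, threshold_db=60.0, ratio=2.0):
    # Single band compressor gain shared by all frequencies, plus the treble boost.
    over = max(0.0, level_db - threshold_db)
    return level_db - over * (1.0 - 1.0 / ratio) + hf_boost_db(level_db)
```

Raising the input from 50 to 80 dB raises the high-frequency output by only 5 dB (65 to 70 dB), against 20 dB for the compressor alone (50 to 70 dB), so the treble band behaves as if it had its own, stronger compressor. That is the sense in which such a scheme can mimic dual band compression.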

================

Hearing Loss and Hearing Aids

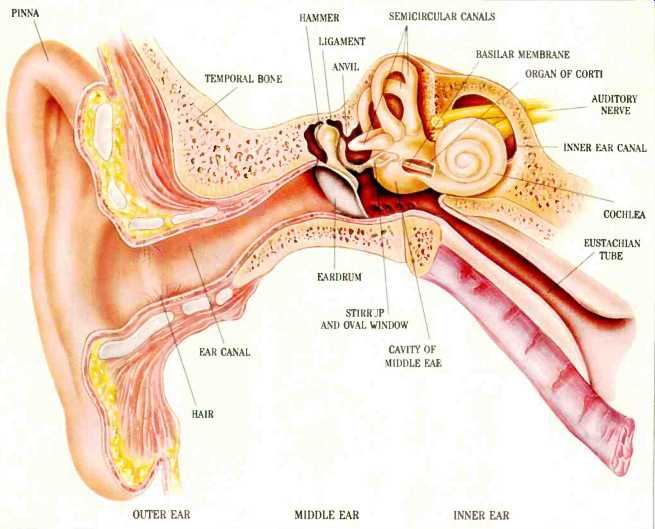

Hearing impairment arises from a multitude of causes, but generally impairments can be placed within two broad groupings: conductive losses and sensorineural losses.

The former are mechanical in nature and include deformities of the outer ear and the ear canal, traumas to the eardrum, tissue growth between the inner and outer ear, abnormalities of the bones of the ear, and fluid and wax in the ear. Many types of conductive loss are treatable and are at least partially reversible.

Sensorineural losses, on the other hand, are usually permanent and irreversible. They most commonly involve damage to the cilia of the hair cells, the tuned sensory cells of the inner ear that trigger nerve impulses in response to sound-induced movements in the fluid of the inner ear. Such damage is generally the result of progressive noise trauma, and over a lifetime the loss in hearing acuity can be considerable.

Generally, victims of sensorineural losses are considered better candidates for hearing aids than persons suffering from conductive losses, because the malformations or lesions of the outer ear often present in conductive losses preclude the fitting of an earpiece, and because many conductive losses can be treated medically or surgically. Sensorineural losses, in contrast, are beyond the scope of even the most advanced microsurgery, but they do not in themselves involve any abnormalities of the outer ear that would preclude aids.

Diagnosing hearing disorders and fitting the hearing impaired with selective amplification are extremely involved processes that cannot be described in even the sketchiest fashion here; however, one rather common misconception can be dispelled. Correctly fitted hearing aids do not match every decibel of hearing loss with a decibel of gain. For slight to moderate losses, the general rule is to provide gain equal to about one-third of the loss, while for the most severe losses, the ratio may be increased to two-thirds.
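The rule of thumb above amounts to simple arithmetic on the audiogram. This Python sketch applies a fixed fraction to the loss at each test frequency; the audiogram values are hypothetical, and real prescriptive procedures are considerably more involved than a single multiplier.

```python
def prescribed_gain_db(audiogram_db_hl, fraction):
    """Target gain per frequency as a fixed fraction of the measured loss.

    audiogram_db_hl maps test frequency (Hz) to hearing loss (dB HL).
    fraction is about 1/3 for slight to moderate losses and up to 2/3
    for the most severe, per the rule of thumb in the text.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    return {freq: round(loss * fraction, 1) for freq, loss in audiogram_db_hl.items()}


# Hypothetical audiogram for a moderate, sloping high-frequency loss.
audiogram = {250: 15, 500: 20, 1000: 30, 2000: 45, 4000: 60}
print(prescribed_gain_db(audiogram, 1 / 3))
```

A 60 dB loss at 4 kHz thus calls for roughly 20 dB of gain under the one-third rule, or about 40 dB if the loss were severe enough to warrant the two-thirds ratio.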

-D. S.

================

The idea of dual band compression itself is not new. Bell Labs and Edgar Villchur, the holder of numerous patents relating to high fidelity, did basic research on multi-band compression in the '70s, but the circuitry of the period did not permit practical implementations. Modern chip technology does. Both Villchur, who has consulted for Resound, and Killion feel strongly that appropriate compression, rather than flexible equalization, is the key to broad-gauge improvements in hearing aid performance. Villchur (in a phone interview with the author) said that dual band compression also ameliorates the "cafeteria effect," in which the hearing impaired have difficulty following a single conversation in the presence of background noise.

"People with hearing loss simply receive fewer cues than the rest of us for distinguishing conversation from background noise," says Villchur. "Multi-band compression helps restore those missing cues." But not all researchers agree. Intellitech attacks the background noise problem more directly with a circuit known as the Zeta Noise Blocker. Intellitech is not a hearing aid manufacturer per se but a small engineering and research company that sells .the Zeta Noise Blocker chip to other manufacturers. The Zeta Noise Blocker is a hybrid device. It utilizes analog circuitry exclusively in the signal path, but the monitoring and control circuits are digital and the processes performed by them may be rightly termed digital pattern analysis.

Although the Zeta Noise Blocker is probably the most sophisticated signal processor used in a hearing aid to date, its actual mode of operation is fairly straightforward. The device consists of a group of interrelated circuits. In aggregate these circuits distinguish background noise from conversation and remove noise from the audio signal.

In its first stage the Zeta Noise Blocker divides the signal into a number of frequency bands and then scans the signal content within each band for abrupt changes of amplitude. Typically, speech directed at the listener will exhibit rapid variations in amplitude, whereas background noise, including background conversation, will show a more gradual variation in level and will tend to approach a steady-state condition. The Zeta Noise Blocker identifies signal content whose envelope appears to represent noise and attenuates the signal level in the frequency bands where noise is apparent. A variable corner frequency is provided for each band to tailor the response more effectively. The Blocker monitors the incoming signal continuously; when no noise above a predetermined threshold is detected, the audio signal passes through unprocessed.
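Intellitech's actual detection logic is proprietary and is not described beyond the outline above, so the following Python sketch shows only one hypothetical way to make the same kind of decision: for each band, look at how much the envelope has fluctuated over a recent window, and attenuate bands whose level is both steady and above a noise floor. The window contents, the 6 dB fluctuation threshold, the 45 dB floor, and the 10 dB attenuation are assumptions, and the per-band corner frequencies are not modeled.

```python
from statistics import mean


def band_gains_db(band_envelopes_db, mod_threshold_db=6.0,
                  noise_floor_db=45.0, atten_db=-10.0):
    """Hypothetical per-band noise decision based on envelope fluctuation.

    band_envelopes_db: one list per band of recent envelope levels (dB).
    Returns one gain (dB) per band: atten_db for bands judged to be steady
    noise, 0.0 (pass unprocessed) otherwise.
    """
    gains = []
    for env in band_envelopes_db:
        fluctuation = max(env) - min(env)       # how much the level has varied
        steady = fluctuation < mod_threshold_db  # slow variation suggests noise
        loud_enough = mean(env) > noise_floor_db  # ignore quiet bands
        gains.append(atten_db if (steady and loud_enough) else 0.0)
    return gains
```

A single click in an otherwise quiet band produces a large max-to-min swing, so this rule would pass it as if it were speech, which mirrors the Blocker's weakness with impulsive noise.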

Because it identifies noise by its relatively long-term fluctuations in signal level, the Zeta Noise Blocker is ineffective in suppressing impulsive noise. On the other hand, it can separate direct speech from background conversation, because the latter tends to exhibit less amplitude variation; but since the two occupy the same frequency bands, the Zeta Noise Blocker cannot remove background speech entirely without interfering with direct speech.

The Zeta Noise Blocker, while unique in its approach, is not the only digital noise suppression system. The Nicolet Phoenix, the world's only fully digital aid, also includes a circuit for suppressing background noise.

above: With ICs, entire aids could be built into eyeglass frames or hooked behind the ear. This placed the microphones near the user's ears, where head diffraction and head motion could provide binaural listening cues.

above: The latest chip technologies allow microphones and electronics to be mounted in the ear, so spectral directional cues from the wearer's pinna and concha are included in the amplified sound.

The Environmental Approach

The primary purpose of programmability is to improve individual fitting, but digitization brings another significant benefit as well, namely the ability to store in memory more than one tuning. Several of the programmables, including the Resound Personal Hearing System, the Widex Quattro, the 3M Memory Mate, and the Nicolet Phoenix, have this capability. The Nicolet Phoenix goes furthest in this regard: it provides three memories storing diagnostically determined equalization, noise reduction, and gain settings for different environments, and the user can switch among them at the push of a button on the body-worn control module.
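Stored programs of the kind described above map naturally onto a small data structure. In this hypothetical Python sketch, each program bundles a gain, an equalization curve, and a noise reduction switch, and a button press steps to the next program; the field names and example environments are illustrative assumptions, not the Phoenix's actual settings or firmware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Program:
    name: str
    gain_db: float
    eq_db: dict[int, float]   # hypothetical per-band equalization, Hz -> dB
    noise_reduction: bool


@dataclass
class HearingAidMemory:
    programs: list[Program]
    index: int = 0

    @property
    def active(self) -> Program:
        return self.programs[self.index]

    def press_button(self) -> Program:
        # Step to the next stored program, wrapping around after the last one.
        self.index = (self.index + 1) % len(self.programs)
        return self.active
```

Cycling with wraparound suits a single push button; a control with one button per memory would simply set `index` directly.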

Such flexibility is impressive, but hearing aid engineers are looking beyond predetermined settings to an adaptive digital hearing aid with enough on-board software to analyze the environmental noise characteristics and recalibrate the aid from moment to moment to maximize speech intelligibility. Considerable progress in both chip design and psychoacoustics will have to take place before hearing aids of such sophistication can be built even in prototype form, but the benefits of such technology, if it can be perfected, could be inestimable.

Programmable Prospects

Some industry analysts, such as William J. Mahon, Editor of The Hearing Journal, suggest that programmable aids will become the norm sometime in the early '90s, but so far market acceptance has been limited.

Part of the problem is high price. The programmables represent new technology and embody a great deal of proprietary research. The companies who have developed programmables obviously have to charge more to recoup initial development costs.

Another problem has been size. All of the first generation instruments except the PHOX were behind-the-ear only, and the Phoenix, the only fully digital unit, also used a body-worn, cable-connected control module. Most of the newer programmable aids will be in-the-ear types, and Starkey, a leading hearing aid manufacturer, is developing a programmable in-the-canal hearing aid.

But customer resistance to size and price is not the only factor limiting programmable acceptance, nor does it appear to be the most important. Dispensers themselves are in many cases reluctant to stock programmables because of the high cost of the diagnostic equipment that must be used with them and because of the lack of compatibility between brands. The dispenser who sells a programmable must purchase the unique computerized test equipment and interfaces required for each brand. This effectively restricts him to a single programmable model, which puts him at considerable risk if the manufacturer decides to abandon the programmable market.

Some solutions to the last problem may be at hand, however. In Europe, a number of hearing aid manufacturers including Siemens, Philips, Hansaton, Phonak, and Rexton have formed a consortium to develop a mutually compatible programming system to be known as the PMC (programmable multichannel system). The PMC is not intended to be truly universal but will only operate with systems developed according to PMC standards by participating companies.

Gennum, a Canadian company which is a leading supplier of hearing aid ICs, is currently at work on a universal "learning" external programmer that can be used with any digital aid, and this promises to provide the ultimate solution to the compatibility problem.

The gradual implementation of industry standards in programmability will undoubtedly give more dispensers the confidence to offer such aids to their patients. At that point the competing theories as to optimal equalization, compression, and gain characteristics will be tested in the marketplace. Academic studies will begin to supplement proprietary research, and eventually one theory will prevail, leaving one or two companies to dominate the digital aid market. Undoubtedly the hearing-impaired consumer will benefit, because the programmable aids do indeed offer a level of signal processing and individual tailoring that isn't possible with the established all-analog technology.

Unfortunately, as in other areas of audio, electronics engineering outruns our understanding of the way human beings process signals with the ear/brain, and the execution of solutions is often clearer than the identification of the problems. The next five years should bring very rapid advances in hearing research as well as engineering, and perhaps by the mid-'90s the "high-fidelity hearing aid" presumptuously advertised by a number of current manufacturers will actually exist.

(adapted from Audio magazine, Sept. 1990)
