A BIT (OR TWO) BETTER

Early (and some continuing) critics of digital audio focused their concerns on the two fundamentals of that technology: Sampling rate and quantization. They claimed that more of each was required to provide true high fidelity, whatever that is.

Instead of the 44.1-kHz sampling rate and 16-bit samples built into the CD standard, skeptics claimed that, for example, 100 kHz and 20 bits would be much more satisfactory. While there's a certain logic in that argument (in the same sense that instead of $100 in my checking account, $250 would be better), the facts argued slightly otherwise. Now, out of the blue, one major manufacturer has introduced technology which seems to vindicate the claims of the critics.

True, a higher sampling rate provides a higher frequency response. Instead of flat response to 20 kHz, you would find your player flat to 50 kHz.

Unfortunately, while everyone hates to admit it, human ears really aren't that good, and even the SPCA would admit that there's no sense in wasting all that data on dogs. The only advantage to a higher sampling rate is the decrease in demands on the anti-aliasing filter preceding a digital audio system and the anti-imaging filter following it. The need to sharply limit audio energy at frequencies higher than half the sampling rate dictates the use of brick-wall filters to preserve our 20-kHz audio bandwidth with a 44.1-kHz sampling rate.

And analog brick-wall filters introduce phase nonlinearities.

However, CD players can easily avoid the problem by using oversampling and digital anti-imaging filters; their phase nonlinearities are then negligible. And even the problem of brick-wall filters on the input of professional recorders may soon be eased with the development of oversampling anti-aliasing filters. In short, an increased sampling rate doesn't buy you much.
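To put a rough number on how much room each sampling rate leaves the filter designer, here is a minimal Python sketch. It assumes a 20-kHz passband and simply measures the octaves between 20 kHz and half the sampling rate; the name `transition_octaves` is hypothetical, and the measure is a simplification (after oversampling, the first image actually starts even higher, at the sampling rate minus 20 kHz).

```python
import math

PASSBAND_HZ = 20_000  # the audio band we want to keep flat


def transition_octaves(fs_hz: float, passband_hz: float = PASSBAND_HZ) -> float:
    """Octaves between the passband edge and half the sampling rate (Nyquist)."""
    nyquist_hz = fs_hz / 2
    return math.log2(nyquist_hz / passband_hz)


for label, fs in [("CD", 44_100), ("critics' 100 kHz", 100_000), ("4x oversampled", 4 * 44_100)]:
    print(f"{label:>18}: {fs / 1000:6.1f} kHz -> {transition_octaves(fs):.2f} octaves")
```

Under these assumptions the CD rate leaves only about 0.14 octave for the filter to go from flat to fully attenuated, versus roughly 1.3 octaves at 100 kHz and 2.1 octaves at a four-times oversampled 176.4 kHz, which is why oversampling relaxes the analog filter so effectively.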

Increasing the quantization word length by a few bits may make more sense. A 16-bit word represents 65,536 amplitude increments, an 18-bit word represents 262,144 increments, and a 20-bit word yields no fewer than 1,048,576 choices. What having more increments buys you, primarily, is an increase in S/N ratio; at the same time, any quantization artifacts are diminished. Now, frankly, a properly dithered 16-bit system has a plenty good S/N ratio, and quantization artifacts are handled in such a way that resolution may be obtained at levels even below that of the least significant bit. What's more, the cost of true 18-bit A/D and D/A converters is shocking, and as for 20-bit converters, well, don't ask.
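The increment counts above are just powers of two, and the S/N gain can be estimated with the textbook rule for an ideal quantizer driven by a full-scale sine wave, 6.02N + 1.76 dB. The sketch below assumes that idealized, undithered case (dither costs a few dB in practice); the function names are illustrative only.

```python
def increments(bits: int) -> int:
    """Distinct amplitude levels an N-bit word can represent."""
    return 2 ** bits


def ideal_snr_db(bits: int) -> float:
    """Textbook S/N of an ideal N-bit quantizer with a full-scale sine wave."""
    return 6.02 * bits + 1.76


for n in (16, 18, 20):
    print(f"{n}-bit: {increments(n):>9,} levels, ideal S/N ~ {ideal_snr_db(n):.1f} dB")
```

Each pair of extra bits is worth about 12 dB on paper, which is the figure that comes up again when we look at Yamaha's approach.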

There would seem to be little sense, then, in increasing the word length.

Thus the Compact Disc standards of 44.1 kHz and 16 bits were seemingly carved in stone, never to be tampered with. Until, of course, someone saw a way to improve on perfection. That "someone" is Yamaha: They have introduced a new line of CD players with 18-bit technology. "What?" you ask, "an 18-bit CD player for my 16-bit CDs? Is that kosher? Is it a gimmick, a whole new ball game, or what?" The answer, as usual, lies in a better understanding of exactly what technology has wrought.

Yamaha's intent with the 18-bit technology of their "Hi-Bit" converter is not to somehow improve the data from the disc, but rather to make better use of that data. In other words, the Hi-Bit technology attempts to overcome problems in 16-bit converters which may limit their decoding of the information coming from the disc. An analogy may be made to oversampling: While the sampling rate per se is increased, the method doesn't create new information; it merely attempts to make better use of the existing information.

Not coincidentally, oversampling is what makes an 18-bit conversion possible, by solving the rather obvious problem of how to come up with 18 bits when the output from the disc is only 16. When the 44.1-kHz, 16-bit signal from the CD is oversampled, both the sampling frequency and the number of bits are increased, the former because of oversampling and the latter because of the multiplication which oversampling entails. For example, the output of an oversampling filter may be 176.4 kHz and 28 bits. Normally, only the 16 most significant bits are used, for conversion by a 16-bit D/A converter, and the rest are discarded (though in some CD player designs they are used for noise shaping). The Yamaha Hi-Bit converter uses 18 of the bits from the output of an oversampling circuit, instead of just 16.
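Where do the extra bits come from? A single multiply inside an interpolating filter already shows the word growing. This is a minimal sketch, not Yamaha's filter: the 12-bit coefficient width, the sample value, and the tap value are all hypothetical, chosen so that 16 + 12 = 28 bits matches the example in the text.

```python
SAMPLE_BITS = 16  # word length coming off the disc
COEFF_BITS = 12   # hypothetical filter-coefficient word length (16 + 12 = 28)
ACC_BITS = SAMPLE_BITS + COEFF_BITS  # a product needs up to 28 bits


def top_bits(word: int, word_bits: int, keep: int) -> int:
    """Keep the `keep` most significant bits of a signed `word_bits`-bit word.

    Python's >> on negative ints is an arithmetic (floor) shift, like
    simple truncation in two's-complement hardware.
    """
    return word >> (word_bits - keep)


sample = 1234   # a fairly quiet 16-bit sample from the disc
coeff = 1500    # hypothetical filter tap
acc = sample * coeff  # 1,851,000: needs more than 16 bits

msb16 = top_bits(acc, ACC_BITS, 16)
msb18 = top_bits(acc, ACC_BITS, 18)
print(msb16, msb18, msb18 >> 2, msb18 & 0b11)  # 451 1807 451 3
```

Keeping 18 bits instead of 16 yields the same upper 16 bits (451) plus two more whose value here is 3, that is, three-quarters of a 16-bit step that plain 16-bit truncation would throw away.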

Those two extra bits do indeed convey useful amplitude information, albeit at levels below the first 16 bits. The problem is that using these two extra bits nominally requires an 18-bit D/A converter, which would be prohibitively expensive for the mass market. Yamaha's trick is that their Hi-Bit system does not really use an 18-bit D/A converter at all. Rather, it uses a 16-bit converter hooked up in an extremely clever way.

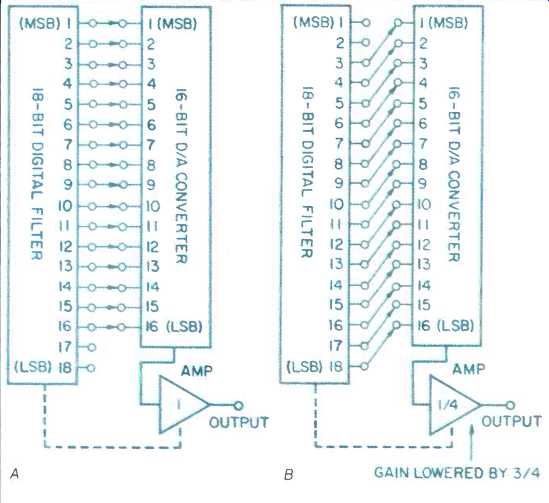

Here's the secret: The 18 bits from the oversampling filter's output are wired through switches to the inputs of a 16-bit D/A converter. When the signal's amplitude is high enough so that all 16 bits are being used to convey it, the upper 16 bits are applied to the 16-bit converter, as usual (Fig. 1A). However, when the signal's amplitude drops to a point where the two upper bits from the oversampling filter are not conveying any information, the 18 bits are shifted downward so that the unused bits are ignored, and the 16 lower bits are utilized instead (Fig. 1B). This adaptive scheme makes sense because in music recording, the two upper bits are rarely used, and then often for only a brief period of time. Through bit shifting, a 16-bit converter may thus handle an 18-bit input.
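The switching decision can be sketched in a few lines of Python. This assumes signed two's-complement samples, so "the two upper bits are not conveying any information" means they merely repeat the sign bit, i.e. the value fits in 16 bits. The names `fits` and `select_bits` are hypothetical; in the real player this is done with hardware switches, not software.

```python
FULL_BITS = 18  # word taken from the oversampling filter
DAC_BITS = 16   # word the converter actually accepts


def fits(value: int, bits: int) -> bool:
    """True if value fits in a signed two's-complement word of `bits` bits."""
    return -(1 << (bits - 1)) <= value < (1 << (bits - 1))


def select_bits(x18: int) -> tuple[int, bool]:
    """Route a signed 18-bit sample to a 16-bit DAC.

    Returns (dac_code, expanded). If the two upper bits carry no information
    (they merely repeat the sign), the lower 16 bits go to the DAC and
    expanded is True (Fig. 1B). Otherwise the upper 16 bits go to the DAC
    and the two least significant bits are dropped (Fig. 1A).
    """
    if not fits(x18, FULL_BITS):
        raise ValueError("sample outside the 18-bit range")
    if fits(x18, DAC_BITS):
        return x18, True
    return x18 >> (FULL_BITS - DAC_BITS), False
```

For example, `select_bits(1003)` sends all of a quiet sample to the converter in expanded mode, while `select_bits(100_000)` falls back to the upper 16 bits (25,000). The `expanded` flag is what would drive the downstream gain switch.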

Of course, you have to compensate for the resulting shifts in amplitude caused by shifting bits. Therefore, the gain of the signal is reduced by three-fourths whenever the lower bits are shifted in; an analog gain block downstream of the D/A handles this chore.

Why three-fourths? Because every time you shift the digits of a binary number one place to the left, you double its value. (In the decimal system, shifting the digits one place to the left multiplies a number's value by 10.) A shift of two places (two bits) quadruples the value. Hence, when the lower bits are shifted up, the signal amplitude becomes four times too large, relative to the unshifted portions of the signal, and the output must be attenuated by three-fourths back to its original value.
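The arithmetic is easy to check. In this sketch (hypothetical names, output measured in steps of the normal, unexpanded path), a quiet 18-bit sample is converted both ways: once through the upper 16 bits alone, and once shifted up two places and then attenuated to one-fourth.

```python
ATTENUATION = 1 / 4  # "reduced by three-fourths" leaves one-fourth


def dac_output(code: int, expanded: bool) -> float:
    """Analog output, in steps of the normal (unexpanded) path."""
    # Shifting left one place doubles a binary value; two places (2 x 2)
    # quadruple it. The expanded path is therefore scaled back to one-fourth.
    return code * ATTENUATION if expanded else float(code)


print(5 << 1, 5 << 2)  # 10 20: one place doubles, two places quadruple

sample18 = 1003  # a quiet signal, in 18-bit LSB units
normal = dac_output(sample18 >> 2, expanded=False)  # upper 16 bits only
expanded = dac_output(sample18, expanded=True)      # lower 16 bits, attenuated
print(normal, expanded)  # 250.0 250.75
```

Both paths agree on the coarse value, but the expanded path keeps the extra three-quarters of a step that the two low bits carry.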

This adaptive 18-bit conversion system may be considered a noise-reduction scheme, in that the signal is being expanded at the D/A converter. The benefits result from the fact that the residual noise of the converter, as well as its conversion nonlinearities, will be proportionally reduced. Looked at another way, a four-times (12-dB) higher analog output will be achieved without increasing the D/A's residual noise and conversion error. When the gain is reduced by three-fourths to bring things back to normal, the noise and conversion errors are reduced to one-fourth their original levels. You get 12 dB more S/N and only one-fourth as much distortion, both equivalent to what you'd get from using an 18-bit converter. Pretty neat, eh?
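The 12-dB figure follows directly from the factor of four. This sketch assumes, as the text implies, that the converter's residual noise and conversion error are fixed in absolute terms and that the attenuator adds none of its own; the variable names are illustrative.

```python
import math

EXPANSION = 4  # a two-bit shift multiplies the DAC's output by 4


def amplitude_db(ratio: float) -> float:
    """Convert an amplitude (voltage) ratio to decibels."""
    return 20 * math.log10(ratio)


# Assumption: the DAC's residual noise and conversion error are fixed in
# absolute terms and the attenuator itself adds nothing.
dac_error = 1.0                       # arbitrary units, same on either path
error_after_atten = dac_error / EXPANSION

print(f"expansion gain:  {amplitude_db(EXPANSION):.2f} dB")
print(f"error remaining: {error_after_atten:.2f} of original")
print(f"two extra bits at ~6.02 dB each: {2 * 6.02:.2f} dB")
```

Both routes land at about 12.04 dB, which is why the expanded 16-bit converter can match an ideal 18-bit one at low levels.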

Fig. 1--In a quasi-18-bit system, when the signal level is high enough to use the first two bits (A), the first 16 bits of the 18-bit signal go directly to a 16-bit D/A converter and the last two bits are ignored. At lower signal levels (B), only the last 16 bits of the signal go to the D/A; to compensate, the output gain is reduced to one-fourth normal.

Of course, as with any clever scheme (and in holding with the general law of the universe stating that there's no free lunch), there is a price to be paid for the benefit accrued.

Specifically, as one might guess, it just ain't easy to get the benefits of an 18-bit converter with a 16-bit converter, no matter how tricky your 16-bit converter is. The problem is this: When the bits are shifted, it is difficult to immediately and simultaneously shift the gain of the analog output to compensate. Furthermore, any static offset will become apparent when the switching takes place.

Until the first 14 bits are occupied, the output is four times its nominal amplitude, so the three-fourths attenuator is used to compensate. When all 14 bits are occupied, the output voltage is at its maximum. What happens when the 15th bit flickers on depends on the design. In a simple quasi-18-bit system, the bits would be shifted over by two places and the attenuator kicked out; output would then increase at normal gain until full 16-bit voltage is reached. Alternatively, there could be a 1-bit shift when the 15th bit goes on and another 1-bit shift when the 16th bit does; in that case, half the attenuation would be kicked out at the first shift, and the rest dropped at the second. Yamaha's Hi-Bit D/A converter, the only quasi-18-bit unit in production that I know of, uses the second technique.
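The two-step variant can be modeled by trying the largest expansion first and backing off one bit at a time, with the gain going from one-fourth to one-half to unity. This is a hypothetical sketch of the logic described above, expressed on the 18-bit word; Yamaha's actual switching thresholds and circuitry are not described beyond this.

```python
FULL_BITS, DAC_BITS = 18, 16


def fits(value: int, bits: int) -> bool:
    """True if value fits in a signed two's-complement word of `bits` bits."""
    return -(1 << (bits - 1)) <= value < (1 << (bits - 1))


def two_step_convert(x18: int) -> tuple[int, float]:
    """Return (dac_code, analog_gain) for a two-step quasi-18-bit converter.

    shift 2: lower 16 bits used, gain 1/4 (quietest signals)
    shift 1: middle 16 bits used, gain 1/2 (half the attenuation removed)
    shift 0: upper 16 bits used, gain 1 (attenuator fully out)
    """
    if not fits(x18, FULL_BITS):
        raise ValueError("sample outside the 18-bit range")
    for shift in (2, 1, 0):  # try the largest expansion first
        drop = FULL_BITS - DAC_BITS - shift  # LSBs discarded: 0, 1 or 2
        code = x18 >> drop
        if fits(code, DAC_BITS):
            return code, 1 / (1 << shift)
    raise AssertionError("unreachable: shift 0 always fits")
```

In every mode, `code * gain` comes out within one normal-path step of the true value `x18 / 4`; what changes is how many of the low bits survive.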

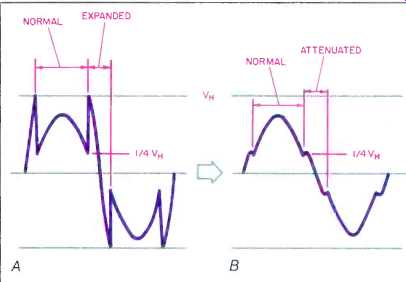

Here is where the luncheon bill is presented. If, due to component tolerances, the attenuator can't reduce the output to precisely one-fourth (or one-half) its shifted value, there will be a glitch in the waveform where the attenuated and non-attenuated signals are joined (Fig. 2). Yamaha had to face that problem when they produced their Hi-Bit D/A converter. Their solution was to combine the attenuator with their player's sample-and-hold (S/H) chip. (The S/H chip transforms the isolated pulses from the D/A into a continuous, stair-step waveform, which is then filtered to produce the audio signal.) The resistors in this attenuator (actually a gain switch) are laser trimmed for the highest possible precision, so the gain errors when the attenuator is switched in and out will be as small as the resistance errors in the D/A chip.
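A quick model shows why attenuator precision matters so much. The sketch below uses the simpler one-step scheme for clarity (the same principle applies to Yamaha's two-step design) and a hypothetical 0.1% gain error, not a figure from Yamaha. It measures the jump in output, in 18-bit LSB units, as the signal crosses the switching point; ideally that jump is exactly one LSB.

```python
def quasi18_output(x18: int, gain_error: float = 0.0) -> float:
    """Output of a one-step quasi-18-bit DAC, in 18-bit LSB units (hypothetical model).

    gain_error is the fractional error of the one-fourth attenuator,
    e.g. 0.001 for a 0.1 % mismatch.
    """
    if -(1 << 15) <= x18 < (1 << 15):
        # Expanded path: lower 16 bits drive the DAC (4x), attenuator scales by ~1/4.
        return x18 * 4 * (0.25 * (1 + gain_error))
    # Normal path: upper 16 bits drive the DAC; the two LSBs are dropped.
    return float((x18 >> 2) * 4)


def boundary_step(gain_error: float) -> float:
    """Output jump as the input steps from 32767 to 32768 (ideal: +1 LSB)."""
    return quasi18_output(32768, gain_error) - quasi18_output(32767, gain_error)


print(boundary_step(0.0), round(boundary_step(0.001), 2))
```

With a perfect attenuator the step is one LSB; with a 0.1% error the output instead drops by about 32 LSBs at the seam, a discontinuity of the kind sketched in Fig. 2.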

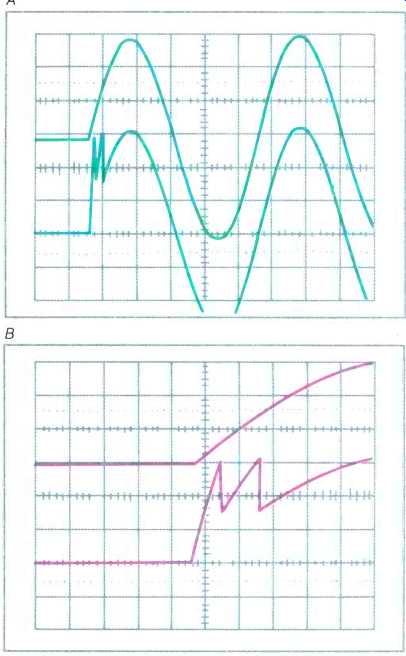

The S/H chip also keeps track of where the attenuator switching occurs and skips the samples which coincide with those transitions. It samples the value before the transition, holds that value until the switching is over, and then picks up with the sample that follows. Thanks to oversampling, which gives us four D/A output samples for every sample in the original recording, no data is lost this way. Judging by oscilloscope traces (Fig. 3) and by reports from those who've heard the system, glitches are not apparent.
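The deglitching step amounts to "hold the last good value through any sample that lands on a gain change." Here is a minimal software sketch of that idea, assuming the gain setting for each oversampled output is known; the function names and the sample values are hypothetical, and the real chip does this in hardware.

```python
def switch_points(gains: list[float]) -> set[int]:
    """Indices where the attenuator gain changes from the previous sample."""
    return {i for i in range(1, len(gains)) if gains[i] != gains[i - 1]}


def deglitch(samples: list[float], switching: set[int]) -> list[float]:
    """Hold the last good value through samples that coincide with gain switching."""
    out: list[float] = []
    for i, s in enumerate(samples):
        if i in switching and out:
            out.append(out[-1])  # skip this sample; keep holding the previous one
        else:
            out.append(s)
    return out


# Hypothetical gain sequence switching from 1/4 to 1 at index 3,
# where the raw output carries a glitch (0.95).
gains = [0.25, 0.25, 0.25, 1.0, 1.0, 1.0]
raw = [0.10, 0.20, 0.30, 0.95, 0.50, 0.60]
print(deglitch(raw, switch_points(gains)))  # [0.1, 0.2, 0.3, 0.3, 0.5, 0.6]
```

Because oversampling supplies several output samples per original sample, holding one of them briefly costs very little, which is the basis of the claim that no data is lost.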

Fig. 2--The waveform from a quasi-18-bit D/A (A) has normal and expanded sections. When expanded sections are attenuated back to normal (B), imperfections in the attenuator will show up as mismatches between normal and attenuated portions of each waveform.

Fig. 3--Normal (A) and expanded (B) 'scope traces, showing attenuation switching and deglitching. The lower trace of each pair shows the smoothed output of the D/A converter, without the attenuation-switching action of the sample-and-hold circuit. The upper trace of each pair shows the final output, after the sample-and-hold circuit. Scales: Vertical, 1 V/div.; horizontal, (A) 500 µs/div., (B) 100 µs/div.

This is only a transitional system, as even Yamaha admits. When true 18-bit D/A chips become widely available, we'll probably see them in high-end CD players; when such chips become affordable, we'll probably see them in less expensive players too.

Which brings us back to the golden-ear critics who complained in the first place. Will the new Hi-Bit system vindicate, at least in spirit, those who contended that 16 bits just weren't enough, or will it just be two bits of one-upmanship? That will have to be decided by, naturally, the critics.

(by KEN POHLMANN; adapted from Audio magazine, Oct. 1987)

Also see: Onkyo Acculinear 18-bit CD player (Nov. 1988)
