by Leonard Feldman

Ever since the first Compact Disc player reached my lab in 1982, it has been clear that measuring the performance of such devices would involve techniques and test signals not normally used in testing analog audio components. The makers of CD players were quick to see that specially made Compact Discs containing test signals would be needed to evaluate their products. These test discs, originally prepared for manufacturers' in-house use, eventually became available to testing and service laboratories. But there was little agreement on what signals the discs should contain.

The first test discs I acquired were supplied by the Philips company of Holland, the co-developer of the CD format. Not surprisingly, the other partner in CD development, Sony, came up with a test disc of its own. Shortly thereafter, Sony offered yet a second test disc, which contained some additional signals left off the hurriedly issued first version. Since then, I have acquired test discs from such diverse sources as the German Hi Fi Institute, the Japan Audio Society, Technics (Matsushita Electric Industrial Co.), and Denon. (In fact, Denon has issued a second test disc for which I was asked to write the liner notes.) Some of these discs include not only test signals but musical selections, ostensibly to tax the capabilities of a CD player or of an entire audio playback system. In addition to the dozen or so discs which these companies offer for testing the electrical performance of CD players, there are discs designed to uncover tracking or error-correction limitations.

While some test signals can be found on more than one of the available test discs, certain signals are unique to only one disc. Clearly, if Test Lab A uses one disc while Test Lab B uses another, their measurements of a given player may or may not be identical.

About 18 months ago, the Electronic Industries Association (EIA) decided to sponsor a committee to develop uniform standards for measuring CD players. John M. Eargle, the well-known recording engineer, design engineer and audio consultant (he heads his own company, JME Associates, and also works with JBL), assumed the chairmanship. The committee has met four times, thus far, and reached some agreement on what should be tested and what test signals would be needed to conduct these tests, but has not yet issued any formal reports or recommendations.

Meanwhile, the Electronic Industries Association of Japan (EIAJ), which has been actively working on the same problem, has issued a complete test standard, in the form of two reports.

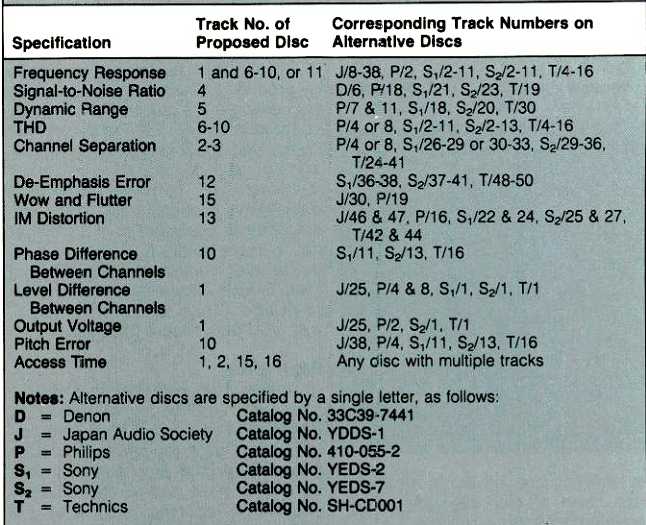

Document No. CP-307, entitled "Methods of Measurement for CD Players," details some 13 individual tests that should be made when evaluating a CD player's performance. The standard tells how to perform the tests and how to report the results in a consistent manner. The EIAJ's document No. CP-308, "Test Disk [sic] for CD Players," describes a standard test disc that should be used in performing the tests listed in CP-307. The disc is to contain 16 tracks, with a total playing time of approximately 63 minutes, but at this time it is not yet available. However, most of the signals that would appear on this disc are available on some of the test discs issued earlier. Table I itemizes the contents of the proposed test disc and which of the 13 tests each test signal is used for. The Table also shows which currently available test discs contain similar or identical test signals. The following paragraphs describe the individual tests, how they are to be performed, and how the results are to be reported.

Frequency Response

As is true of any audio component, frequency response measurements are of primary importance in testing CD players. The methods used to measure the frequency response of a CD player are much the same as those used for any audio component, except that the test signals are supplied by the test disc instead of a signal generator. Response measurements may be made using either 17 spot frequencies, recorded at maximum (0-dB) level and ranging from 4 Hz to 20 kHz, or a sweep-frequency signal that extends from 16 Hz to 20 kHz. According to the proposed EIAJ standard, if the maximum deviation over the range of frequencies tested is less than 1.0 dB, the deviation need not be stated in the manufacturer's published specifications. I suspect, however, that most manufacturers (and, for that matter, most testers and reviewers) will continue to state the deviation in decibels, "plus or minus," as is the usual practice for this specification.
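The arithmetic behind the 1.0-dB rule is simple enough to sketch. The Python below is a minimal illustration, not part of the EIAJ text: it assumes the deviation is taken as the largest departure of any spot reading from the 1-kHz reading (the standard's exact reference point is not given here), and the function names and readings are hypothetical.

```python
def max_deviation_db(levels_db, reference_hz=1000):
    """Largest departure (dB) of any spot reading from the reference reading.

    levels_db maps test frequency in Hz to measured output level in dB.
    Using the 1-kHz reading as the reference is an assumption of this sketch.
    """
    ref = levels_db[reference_hz]
    return max(abs(level - ref) for level in levels_db.values())


def deviation_must_be_stated(levels_db, limit_db=1.0):
    """Proposed EIAJ rule: a maximum deviation under 1.0 dB need not be published."""
    return max_deviation_db(levels_db) >= limit_db


# Hypothetical spot readings, not measured data.
readings = {20: -0.3, 100: -0.05, 1000: 0.0, 10000: -0.1, 20000: -0.6}
print(f"deviation: ±{max_deviation_db(readings):.2f} dB")
print("must be stated:", deviation_must_be_stated(readings))
```

If the standard instead defines deviation as the total spread between the highest and lowest readings, only `max_deviation_db` would need to change.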

----------------------

From the beginning, makers of CD players knew that test discs would be needed, so they made their own. Now there's a proposal from the EIAJ for a single standard test disc and uniform measurement standards. But do they go far enough?

----------------------

Signal-to-Noise Ratio

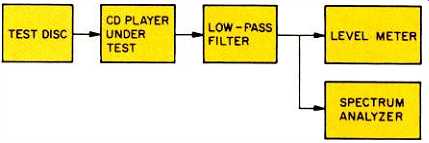

Figure 1 shows the test setup recommended for S/N measurements. The EIAJ approach resolves a couple of questions and does away with a couple of ambiguities that have been bothering me since I began testing CD players. You will notice that both a filter and a weighting network are included in the signal path. The weighting network is specified as having an A-weighted curve; the filter called for has cutoff frequencies of 4 Hz and 20 kHz, with an attenuation of 60 dB or more at 24.1 kHz. (The EIAJ document refers to this both as a band-pass and as a low-pass filter. While its low-end roll-off does make it, technically, a band-pass filter, the roll-off is so close to d.c. that it can be considered a low-pass filter for all practical purposes.) An alternative filter, having a slope of only 18 dB per octave and a cutoff frequency of 30 kHz, is also allowed "unless any question arises in measurement." In the past, I've wondered what to do about out-of-band noise components, which, though inaudible, are nevertheless quite measurable and significant in the absence of filtering and weighting networks. Now, if we can all agree to use A-weighting and the recommended type of filter, we should all come up with consistent S/N figures for CD players. Of course, the reference level from which noise is measured is the maximum output level (0 dB), which gives the most favorable S/N number.
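To make the weighting network concrete, here is a minimal Python sketch of the standard analytic A-weighting curve (the form given in IEC 61672-1), plus S/N computed from two RMS voltage readings referred to the 0-dB output. The function names are hypothetical, and the sketch assumes the noise voltage has already been read through the 4-Hz/20-kHz filter; it does not model the EIAJ filter or its tolerances.

```python
import math


def a_weighting_db(f_hz):
    """Gain in dB of the analytic A-weighting curve (IEC 61672-1 form) at f_hz."""
    f2 = f_hz * f_hz
    ra = (12194.0 ** 2 * f2 * f2) / (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20.0 * math.log10(ra) + 2.00  # +2.00 dB normalizes the curve to 0 dB at 1 kHz


def snr_db(v_max_rms, v_noise_rms):
    """S/N referred to the maximum (0-dB) output, from two RMS voltage readings.

    v_noise_rms is assumed to be read through the filter and weighting network.
    """
    return 20.0 * math.log10(v_max_rms / v_noise_rms)


for f in (100, 1000, 10000):
    print(f, "Hz:", round(a_weighting_db(f), 1), "dB")
print(round(snr_db(2.0, 20e-6), 1), "dB")  # hypothetical 2-V output, 20-uV noise
```

The weighting heavily discounts low-frequency noise (about -19 dB at 100 Hz), which is one reason weighted and unweighted S/N figures can differ.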

Like the S/N test tracks on current test discs, the S/N track on the proposed disc would be digitally recorded as a succession of samples of zero amplitude (16 zeros per sample) over its entire length. This simulates an infinitely attenuated signal.

Dynamic Range

I continue to be somewhat perplexed by manufacturers who quote both S/N ratio and dynamic range. Often the two figures are the same, but occasionally they differ. The new standard clarifies the term "dynamic range" and dictates a specific way to measure it. Dynamic range is derived from the total harmonic distortion measured when a 1-kHz signal at 60 dB below maximum (0-dB) recorded level is reproduced: it is the THD, expressed in dB below that signal, plus 60 dB. As an example, suppose that during playback of a 1-kHz, -60-dB signal you read a THD of 3.0%. Translating that to dB yields -30.5 dB. Adding those 30.5 dB to the 60 dB by which the test signal lies below maximum level results in a figure for dynamic range of 90.5 dB. Once again, band-pass and A-weighting filters are to be used in measuring the residual THD for the -60-dB signal. You can see that dynamic range, as quoted by makers of CD players, need not necessarily be equal to the S/N specification for a given player.
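The dynamic-range arithmetic can be checked with a few lines of Python. This minimal sketch (the function name is hypothetical) converts the THD percentage to dB and adds back the 60 dB by which the test signal lies below maximum level, reproducing the 3.0% example.

```python
import math


def dynamic_range_db(thd_percent_at_minus60, signal_level_db=-60.0):
    """Dynamic range from the THD read on a 1-kHz track recorded at -60 dB."""
    thd_db = 20.0 * math.log10(thd_percent_at_minus60 / 100.0)  # 3.0% -> about -30.5 dB
    # The residual lies |thd_db| below the signal, which lies |signal_level_db| below 0 dB.
    return -thd_db - signal_level_db


print(round(dynamic_range_db(3.0), 1))  # 90.5
```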

Fig. 1--Test setup for measuring S/N ratio, as recommended by the EIAJ. Using a "low-pass" filter (see text) eliminates all out-of-band noise from the measured results.

Fig. 2--Test setup for measuring channel separation, as recommended by the EIAJ. By using the filter and a spectrum analyzer, only fundamental crosstalk products will be measured; harmonics will be ignored.

Total Harmonic Distortion

The EIAJ recommends that THD be measured at 14 test frequencies, all reproduced at maximum recorded level. A low-pass filter is inserted in the signal-measurement path, effectively taking care of any out-of-band components that might otherwise upset the readings observed on a distortion meter. Personally, I would have preferred that the standard call for a spectrum analyzer, without a filter, instead of a distortion meter. Spectrum analysis discloses the presence of any non-harmonically related beats, noise, etc., none of which is, strictly speaking, THD, but all of which may nevertheless be audibly annoying to a listener.
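A spectrum-analyzer reading lets harmonics be separated from other residue before THD is computed. The Python sketch below assumes THD is the RMS sum of harmonic amplitudes divided by the fundamental (a common definition, not quoted from the EIAJ document); the analyzer peaks, the 1-Hz matching tolerance, and the function names are all hypothetical.

```python
import math


def split_spectrum(peaks, f0_hz, tol_hz=1.0):
    """Sort analyzer peaks into fundamental, harmonics of f0_hz, and everything else.

    peaks: list of (frequency_hz, amplitude_v) pairs read from the analyzer.
    """
    fundamental = 0.0
    harmonics, others = [], []
    for f, a in peaks:
        n = round(f / f0_hz)  # nearest harmonic number
        if n >= 1 and abs(f - n * f0_hz) <= tol_hz:
            if n == 1:
                fundamental = a
            else:
                harmonics.append((f, a))
        else:
            others.append((f, a))  # beats, noise spurs, etc.
    return fundamental, harmonics, others


def thd_percent(fundamental, harmonics):
    """RMS sum of harmonic amplitudes relative to the fundamental, in percent."""
    return 100.0 * math.sqrt(sum(a * a for _, a in harmonics)) / fundamental


# Hypothetical peaks for a 998.7-Hz test tone.
peaks = [(998.7, 1.0), (1997.4, 0.003), (2996.1, 0.004), (4410.0, 0.002)]
f0, harm, other = split_spectrum(peaks, 998.7)
print(f"THD {thd_percent(f0, harm):.2f}%; non-harmonic peaks: {other}")
```

A distortion meter would lump the 4410-Hz spur into its single reading; here it is reported separately.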

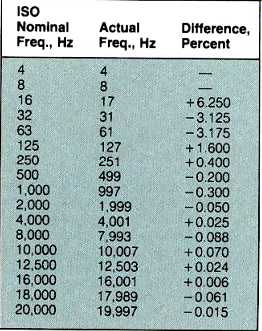

Another important point should be noted about the THD measurement or, more specifically, about the test frequencies used. The EIAJ standard calls for the familiar ISO test frequencies shown in the first column of Table II. The actual frequencies to be found on the proposed standard test disc (and on several existing test discs) are not quite the ISO frequencies; these are also shown in Table II. The differences are small, as the Table shows, but extremely important.

The problem is that certain of the ISO standard frequencies are integral sub-multiples of the CD's 44.1-kHz sampling frequency. While a 16-bit digital encoding system can theoretically have as many as 65,536 (2^16) distinct codes, representing that many different signal values, the number of distinct codes actually used will be limited when the ratio of the CD sampling frequency to the recorded frequency is an integer. The number of distinct codes is also reduced when the recorded signal level is low, and many test tones are recorded at low levels.
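Why an integer ratio matters can be shown with exact fractions: a sampled sine repeats after N samples, where N is the smallest count for which N × f / fs is a whole number, so it can never use more than N distinct codes. This minimal Python sketch (function name hypothetical) computes that period. It generalizes the integer-ratio case in the text, and it also shows that an even 1000 Hz repeats every 441 samples, far sooner than 998.7 Hz does.

```python
from fractions import Fraction

CD_FS_HZ = 44100  # CD sampling frequency


def repeat_period_samples(f_hz, fs_hz=CD_FS_HZ):
    """Smallest N for which N * f / fs is a whole number (the sine's repeat period).

    Pass f_hz as an int or a decimal string such as "998.7" so the ratio stays exact.
    A tone can never use more distinct sample values than this period.
    """
    return (Fraction(f_hz) / fs_hz).denominator


for f in (315, 317, 1000, "998.7"):
    print(f, "Hz repeats every", repeat_period_samples(f), "samples")
```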

Using too few different codes can cause artificially inflated distortion readings. These would obscure the important differences in D/A linearity which distortion tests are basically designed to measure.

For example, if a test signal of 315 Hz (1/140 of 44.1 kHz) were used at a level of -20 dB, only 71 different codes would be used in a period of 100 ms. Maintaining the level at -20 dB but shifting the frequency up by only 2 Hz, to 317 Hz (a bit less than 1/139 of 44.1 kHz), would yield 3,282 codes over the same 100 ms! We are indebted here to Robert A. Finger of the CBS Technology Center, who studied this problem and came up with detailed test-frequency recommendations. His findings were transmitted to the EIA's CD measurement standards committee, which, in turn, notified the corresponding committee of the EIAJ.
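The 315-Hz versus 317-Hz comparison can be approximated by quantizing a sine and counting distinct sample values. The Python sketch below assumes the -20-dB level is a peak level referred to a full-scale value of 32,767, plain rounding with no dither, and samples starting at zero phase (all assumptions; the function name is hypothetical). Under those assumptions it reproduces the 71 codes for 315 Hz; the 317-Hz count depends on such details and need not match the 3,282 quoted exactly.

```python
import math

FULL_SCALE = 32767  # largest positive 16-bit sample value (assumed 0-dB reference)


def distinct_codes(f_hz, level_db, duration_s=0.1, fs_hz=44100):
    """Count distinct 16-bit codes in a quantized sine whose peak is level_db re full scale."""
    amplitude = FULL_SCALE * 10 ** (level_db / 20.0)
    n_samples = round(duration_s * fs_hz)
    codes = {
        round(amplitude * math.sin(2 * math.pi * f_hz * n / fs_hz))
        for n in range(n_samples)
    }
    return len(codes)


print(distinct_codes(315, -20))  # 71
print(distinct_codes(317, -20))
```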

As a result, the EIAJ's published reports make due note of the problem, and the frequencies shown in the middle column of Table II are recommended for all future CD test discs, instead of the ISO frequencies shown in the Table's first column. Most of the nominal ISO and actual test frequencies differ by less than 1%. While the true ISO frequencies could be used for frequency response and other measurements not related to distortion, in practice the frequencies for distortion measurements on the proposed test disc will probably be used for these parameters as well.

For stating the THD results, the EIAJ document declares that when no frequency is stated, a test frequency of 1 kHz (actually 998.7 Hz) is to be understood. Otherwise, the specification should read:

"Total Harmonic Distortion _% or less (_Hz) (EIAJ)."

Channel Separation

I've wondered for some time why I am unable to confirm the consistently high figures of channel separation quoted by most manufacturers. Oh, it's not that my measurements have slighted the CD players (80 to 85 dB of channel separation is hardly inadequate); it's just that I have been unable to measure the 90 and sometimes 95 dB quoted for this spec. If manufacturers have been using the technique outlined in the EIAJ report, I now understand why the minor discrepancy has existed. In the EIAJ standard, a spectrum analyzer is used to compare the outputs of the two channels when a test signal appears at full (0-dB) amplitude on one of the channels. The standard specifies that in measuring the ratio of the desired and undesired outputs, the "leak" or crosstalk signal should be that of the fundamental frequency alone, and should not include harmonic components. A filter is recommended (Fig. 2) to ensure this. I don't use the filter.
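The effect of counting only the fundamental can be sketched in software, with the Goertzel algorithm standing in for the filter and spectrum analyzer of Fig. 2 and a plain RMS ratio standing in for an unfiltered reading. The signals are hypothetical: the undriven channel carries a -80-dB leak at the 1-kHz fundamental plus a 3-kHz component at -60 dB. Function names are also hypothetical.

```python
import math

FS_HZ = 44100


def tone_amplitude(samples, f_hz, fs_hz=FS_HZ):
    """Peak amplitude of the component at f_hz, via the Goertzel algorithm.

    Assumes f_hz falls on an exact DFT bin (a whole number of cycles in the block).
    """
    n = len(samples)
    k = round(f_hz * n / fs_hz)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s1 = s2 = 0.0
    for x in samples:
        s1, s2 = x + coeff * s1 - s2, s1
    power = s1 * s1 + s2 * s2 - coeff * s1 * s2  # |X(k)|^2
    return 2.0 * math.sqrt(max(power, 0.0)) / n


def rms(samples):
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def sine(f_hz, amp, n):
    return [amp * math.sin(2 * math.pi * f_hz * i / FS_HZ) for i in range(n)]


N = 4410  # 100 ms: exactly 100 cycles of 1 kHz and 300 cycles of 3 kHz
driven = sine(1000, 1.0, N)
leak = [a + b for a, b in zip(sine(1000, 1e-4, N), sine(3000, 1e-3, N))]

fundamental_only = 20 * math.log10(tone_amplitude(driven, 1000) / tone_amplitude(leak, 1000))
wideband = 20 * math.log10(rms(driven) / rms(leak))
print(f"fundamental only: {fundamental_only:.1f} dB, wideband: {wideband:.1f} dB")
```

With these made-up signals the fundamental-only figure is 80 dB while the wideband figure is about 60 dB, the kind of gap that can separate a filtered, fundamental-only specification from an unfiltered reading.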

Two methods of specifying separation are suggested. The first simply would state:

"Channel separation _dB or more (EIAJ)."

A frequency of 1 kHz is understood.

The second, more specific method would state the frequency or frequencies at which the separation measurement was made, and would be expressed as follows:

"Channel separation _dB or more (_kHz) (EIAJ)."

De-Emphasis Error

The Compact Disc format provides for a system of pre-emphasis and de-emphasis not unlike that used in FM broadcasting. Disc producers have the option of boosting high frequencies during recording, following a fixed curve, and attenuating those high frequencies during playback, automatically, to reduce any residual noise to still lower levels. In order to maintain perfectly flat frequency response when pre-emphasis and de-emphasis are used, it is essential that the de-emphasis curve be the exact reciprocal of the pre-emphasis curve. A track on the proposed CD test disc would contain five test frequencies (125 Hz, 1 kHz, 4 kHz, 10 kHz, and 16 kHz) to determine the amount of de-emphasis error, if any, produced by a given player under test. The de-emphasis error is quoted in dB. If a manufacturer does not state the test frequency, it is assumed to be 10 kHz.
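The ideal de-emphasis curve against which the error is judged can be computed from the CD format's emphasis time constants, 50 µs and 15 µs (these values come from the CD specification, not from the article). This minimal Python sketch assumes the error is reported as measured gain minus ideal gain at each test frequency; the function names are hypothetical.

```python
import math

TAU1_S = 50e-6  # CD emphasis time constants (from the CD specification)
TAU2_S = 15e-6


def ideal_deemphasis_db(f_hz):
    """Gain in dB of the ideal de-emphasis network (1 + jwT2) / (1 + jwT1)."""
    w = 2.0 * math.pi * f_hz
    return 10.0 * math.log10((1.0 + (w * TAU2_S) ** 2) / (1.0 + (w * TAU1_S) ** 2))


def deemphasis_error_db(f_hz, measured_gain_db):
    """Assumed convention: measured de-emphasis gain minus the ideal gain."""
    return measured_gain_db - ideal_deemphasis_db(f_hz)


for f in (125, 1000, 4000, 10000, 16000):  # the five frequencies on the proposed track
    print(f"{f:>6} Hz: ideal {ideal_deemphasis_db(f):6.2f} dB")
```

At 10 kHz the ideal network cuts about 7.6 dB, so a player that cuts only 7.1 dB there would show a de-emphasis error of about +0.5 dB under this convention.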

TABLE I--EIAJ-recommended CD test signals, and currently available test discs from which they may be obtained.

[Tracks for alternative discs are indicated following the slash (/) identifying the disc. For example, P/19 means use track 19 of the Philips disc as an alternative to the proposed test disc for the particular measurement.]

TABLE II--ISO standard frequencies compared with recommended test frequencies for use in CD player performance testing.

Wow and Flutter

Although most manufacturers have elected to describe the wow and flutter of their CD players as "below measurable limits," the EIAJ standard makes provision for measuring this parameter, however small the resulting figure may be. The proposed test disc would carry a 3.15-kHz steady tone--the frequency normally used to measure wow and flutter in tape decks and analog turntables--and manufacturers are given a choice of publishing the weighted peak figure obtained or simply stating that the wow and flutter is below the measurement threshold. If the latter statement is used, it means that wow and flutter is ±0.001% weighted peak or less.

Intermodulation Distortion

Two types of IM measurement are called for in the EIAJ standard. The first is the familiar SMPTE IM, which involves the use of 60-Hz and 7-kHz signals in a 4:1 ratio. The second IM measurement, the so-called CCIF IM, is measured by using two high-frequency signals (11 and 12 kHz would be available on the proposed standard test disc) and noting the percentage of 1-kHz signal beat appearing in the player's output. Once again, a low-pass filter is inserted in the measurement chain to eliminate any out-of-band components.
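Which output frequencies each IM test looks for can be listed by enumerating sum and difference products. The Python sketch below (hypothetical function name) lists in-band products |m·f1 ± n·f2| up to third order; it does not reproduce the EIAJ percentage formulas, which the article does not give.

```python
def intermod_products(f1_hz, f2_hz, max_order=3, upper_hz=20000):
    """In-band IM frequencies |m*f1 +/- n*f2| with m, n >= 1 and m + n <= max_order."""
    products = set()
    for m in range(1, max_order):
        for n in range(1, max_order - m + 1):
            for f in (abs(m * f1_hz - n * f2_hz), m * f1_hz + n * f2_hz):
                if 0 < f <= upper_hz:
                    products.add(f)
    return sorted(products)


print(intermod_products(11000, 12000))  # CCIF pair: [1000, 10000, 13000]
print(intermod_products(60, 7000))      # SMPTE pair: sidebands around 7 kHz, plus 14 kHz
```

The 1-kHz difference tone from the 11/12-kHz pair is the beat the CCIF test measures; the SMPTE pair instead produces sidebands spaced 60 Hz apart around the 7-kHz carrier.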

Phase Difference Between Channels

This test, as its name implies, simply measures the phase difference between two high-frequency signals, one recorded on each channel. If 20 kHz is used, the phase difference between channels, stated in degrees, need not be accompanied by a statement of the test frequency used. This test will easily differentiate between players that use a single, multiplexed D/A converter and those that use two separate D/A converters. The former will always display a phase difference between left and right channels of approximately 82° (assuming 20-kHz test signals on each channel), owing to the time delay of about 11.3 µs (half of one sampling period) between the D/A conversions of left- and right-channel samples.
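The roughly 82° figure follows from phase = 360° × f × delay. This short Python sketch assumes that a multiplexed converter offsets the two channels by half of one 44.1-kHz sampling period, about 11.3 µs; a 12-µs offset would instead give about 86°. The function name is hypothetical.

```python
CD_FS_HZ = 44100


def interchannel_phase_deg(f_hz, delay_s):
    """Phase difference in degrees produced by a fixed time offset between channels."""
    return 360.0 * f_hz * delay_s


# Assumption: one multiplexed D/A converter converts L and R half a sample period apart.
half_period_s = 1.0 / (2 * CD_FS_HZ)
print(f"offset {half_period_s * 1e6:.2f} us -> "
      f"{interchannel_phase_deg(20000, half_period_s):.1f} degrees at 20 kHz")
```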

Level Difference Between Channels

The purpose of this test is obvious, and the new EIAJ standard requires that it be performed using only a 1-kHz test signal at 0-dB (maximum) recorded level. Results of the test are to be stated in dB, as follows:

"Level difference between channels _dB or less (EIAJ)."

Output Voltage

The specification for output voltage is intended to assist the user when connecting a CD player to other audio components. The EIAJ standard requires that a CD player's output voltage be stated for signals at maximum recorded level. The player's volume or output-level controls, if any, should be set either at maximum or, if the player has a single detent or reference mark on its level control, at that reference level. Output voltage specifications are to include a "plus or minus" tolerance, e.g., "Output voltage, 2.0 ±0.5 V."

Pitch Error

Contrary to popular notion, slight errors of pitch can be introduced during playback of a Compact Disc. To determine pitch error, the highest available frequency on the proposed test disc, 20 kHz, is measured with a frequency counter. In reporting pitch error, the difference between the measured result and 20 kHz is expressed as a percentage by using the following formula, where F1 is the measured frequency and F0 is 20 kHz:

$$\text{Pitch error} = \frac{F_1 - F_0}{F_0} \times 100\ (\%)$$
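The pitch-error formula translates directly into code. A minimal Python sketch, with a hypothetical function name and a made-up counter reading of 20,002 Hz:

```python
def pitch_error_percent(measured_hz, nominal_hz=20000.0):
    """Pitch error as (F1 - F0) / F0 x 100, with F0 the 20-kHz test tone."""
    return (measured_hz - nominal_hz) / nominal_hz * 100.0


print(f"{pitch_error_percent(20002.0):+.3f} %")  # hypothetical counter reading
```

A 2-Hz offset at 20 kHz corresponds to a pitch error of +0.01%.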

Access Time

The only measurement in the entire EIAJ standard that addresses the mechanical rather than the electrical performance of a CD player is access time. Two types are defined: short access time and long access time.

Short access time is the number of seconds that elapse from the time the play button is pressed (in the middle of a track or in a ready-to-play state) until the next adjacent track begins to play.

Long access time is the number of seconds elapsing from the time the play button is pressed while the first (innermost) track is playing until the time the last (outermost) track begins to play. Both access times are measured with an ordinary stopwatch. In specifications, this measurement is understood to be long access time, unless otherwise stated.

Missing Measurements

As far as it goes, the new EIAJ recommendations do an excellent job of standardizing the way measurements of CD players are to be made and reported. As readers of our "Equipment Profiles" know, however, some of the major differences among CD players are related more to their electromechanical than their purely electrical performance. The ability to track a disc accurately, even if it is scratched or dirty, varies greatly among current models. So, too, does the ability to maintain good tracking in the presence of vibration or mechanical shocks applied to all plane surfaces of a player.

Error-correction capability (which is purely electrical) affects a player's performance in these areas, too. There should also be some sort of test to tell what kind of filtration is in use, and a test to determine phase error within a single channel. The phase test would not be easy to accomplish (no test disc yet has properly accomplished it), but it would quantify a property which many critics blame for unpleasantness in CD sound. Perhaps separate standards for measuring these qualities of CD players are required. In any event, the EIAJ is to be commended for coming up with a workable measurement standard so soon after the introduction of Compact Discs and CD players.

(adapted from Audio magazine, Dec. 1985)
